by Admin | Dec 11, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – the Strategic Computing Initiative was ended by DARPA in 1993. DARPA stands for the Defense Advanced Research Projects Agency, a research and development agency founded by the US Department of Defense in 1958 as ARPA. Although its work was intended for military use, many of the innovations the agency funded reached well beyond the needs of the US military. Technologies that emerged with DARPA’s backing include computer networking and graphical user interfaces. DARPA works with academics and industry and reports directly to senior DoD officials.

The Strategic Computing Initiative was founded in 1983, after the first AI winter in the 70s. The initiative supported projects that helped develop machine intelligence, from chip design to AI software. The DoD spent a total of 1 billion USD (not adjusted for inflation) before the program’s shutdown in 1993. Although the initiative failed to reach its overarching goals, specific targets were still met.

This project was created in response to Japan’s Fifth Generation Computer program, funded by the Japanese Ministry of International Trade and Industry in 1982. The goal of that program was to create computers built around massively parallel computing and logic programming, and to propel Japan to the top in advanced technology. This would then create a platform for future developments in AI. By the time the program ended, opinion was divided between those who considered it a failure and those who considered it ahead of its time.

Although the results of the SCI and other computer and AI-related projects in the 80s were mixed, they helped bring funding back to AI development after the first AI winter in the 70s. The History of AI marks the Strategic Computing Initiative as an important event in AI because it revived AI in the US, and its end signifies the second AI winter.

by Admin | Dec 4, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – Herbert Simon and Allen Newell developed Logic Theorist in December 1955. Logic Theorist is a computer program that is considered to be the first AI program. It was the first program deliberately designed to perform automated reasoning. It was able to prove 38 of the first 52 theorems in chapter 2 of the Principia Mathematica by Alfred North Whitehead and Bertrand Russell.

Herbert Simon was an American economist, political scientist, and cognitive scientist. In addition to Logic Theorist, he was known for research into decision-making in organisations and for the theories of bounded rationality and satisficing. He worked at Carnegie Mellon University for the majority of his career. Simon received the Turing Award in 1975 for contributions to AI, human cognition, and list processing, and the Nobel Prize in Economics in 1978.

Allen Newell was an American researcher of computer science and cognitive psychology at the RAND Corporation and Carnegie Mellon University. He collaborated with Herbert Simon in developing Logic Theorist and General Problem Solver, some of the earliest examples of Artificial Intelligence. In 1975 he received the ACM Turing Award, the most prestigious award in computer science, jointly with Simon.

The HAI initiative considers the development of Logic Theorist important in the History of Artificial Intelligence. It was a pioneering piece of research in the study of AI. Logic Theorist would also influence concepts in AI research, such as heuristics and reasoning as search.

by Admin | Nov 27, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – Yann LeCun, Yoshua Bengio, and others published a series of papers in November 1998. The papers discussed neural networks and handwriting recognition, as well as backpropagation. One of them can be read here.

Yoshua Bengio is a Canadian computer scientist, most notable for his work on neural networks and deep learning. He is an influential scholar and one of the most cited computer scientists. In the 1990s and 2000s, he helped make major advances in the field of deep learning. Bengio is also a Fellow of the Royal Society.

Yann LeCun is a French computer scientist, renowned for his work on deep learning and artificial intelligence. He is also notable for contributions to robotics and computational neuroscience. He is the Silver Professor of the Courant Institute of Mathematical Sciences at NYU. In addition, LeCun is the Chief AI Scientist for Facebook.

The HAI initiative recognises these papers as further pioneering work in artificial intelligence, notably in neural networks and backpropagation. Furthermore, both Bengio and LeCun were recipients of the 2018 ACM Turing Award for their contributions to deep learning, which is an aspect of artificial intelligence. Thus, the initiative considers this an important event in AI history.

by Admin | Nov 20, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – Rodney Brooks published “Elephants Don’t Play Chess” in 1990. The paper proposed a “ground-up approach” to developing AI, in contrast with Classical AI. Brooks dubbed this approach “Nouvelle AI”. The paper can be read and downloaded here.

In the abstract, Brooks wrote – “We explore a research methodology which emphasizes ongoing physical interaction with the environment as the primary source of constraint on the design of intelligent systems. We show how this methodology has recently had significant successes on a par with the most successful classical efforts.”

Rodney Brooks is an Australian roboticist. He was the Panasonic Professor of Robotics at the Massachusetts Institute of Technology, as well as the director of the MIT Computer Science and Artificial Intelligence Laboratory. Brooks advocated an actionist approach to robotics and linked robotics to Artificial Intelligence.

The History of AI initiative sees this paper as important because it shows an alternate vision for AI and its development. Although it diverges from other methods of AI, it was still pioneering AI in a different way. Thus, HAI considers the paper as a relevant marker in the History of AI.

by Admin | Nov 13, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – Marvin Minsky and Seymour Papert published an expanded edition of Perceptrons in 1988. The original book, published in 1969, explored the concept of the “perceptron” but also highlighted its limitations. The revised and expanded edition added a chapter countering criticisms made of the book in the twenty years after its publication. The original Perceptrons was pessimistic in its predictions for AI, and is thought to have been a cause of the first AI winter.

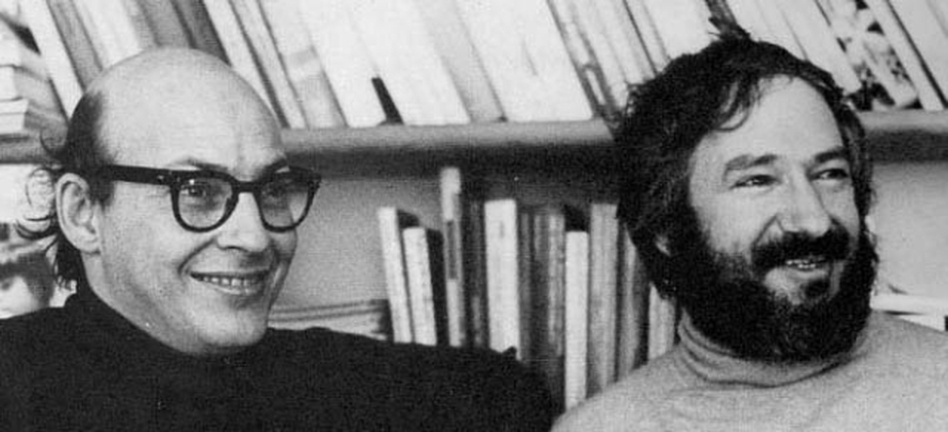

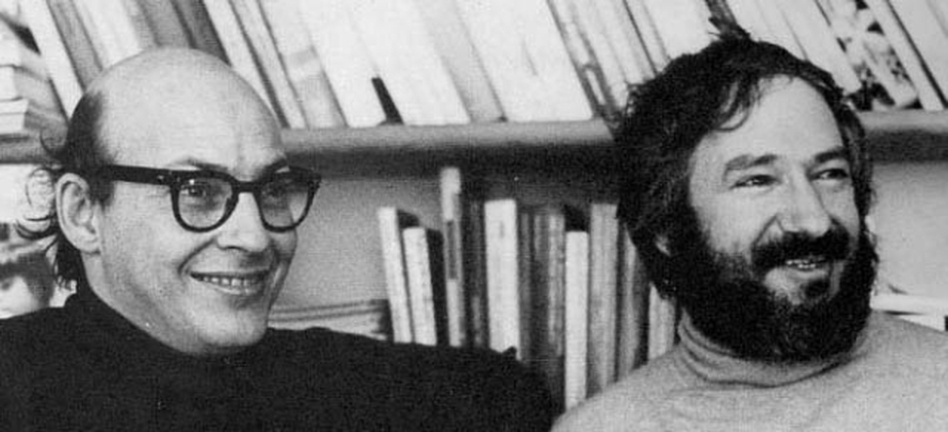

Marvin Minsky was an important pioneer in the field of AI. He co-authored the research proposal for the Dartmouth Conference, which coined the term “Artificial Intelligence”, and he took part in the conference when it was held the following summer. Minsky also co-founded the MIT AI Lab, which went by several names over the years, and the MIT Media Laboratory. In popular culture, he was an adviser on Stanley Kubrick’s acclaimed movie 2001: A Space Odyssey. He won the Turing Award in 1969.

Seymour Papert was a South African-born mathematician and computer scientist. His teaching and research were mainly associated with MIT. He was also a pioneer in Artificial Intelligence and a co-creator of the Logo programming language, which is used in education.

The History of AI initiative considers this republication important because it revisited and furthered discourses on AI. The original book was also a cause for the first AI winter, a pivotal event in the history of AI. Furthermore, Marvin Minsky was one of the founders of AI. Thus, HAI sees Perceptrons (republished 1988) as meaningful in the development of Artificial Intelligence.

by Admin | Nov 8, 2020 | News

Dr Lorraine Kisselburgh is the inaugural Chair of ACM’s global Technology Policy Council, where she oversees technology policy engagement in the US, Europe, and other global regions. Drawing on 100,000 computer scientists and professional members, ACM’s public policy activities provide nonpartisan technical expertise to policy leaders, stakeholders, and the general public about technology policy issues, including the 2017 Statement on Algorithmic Transparency and Accountability and the 2020 Principles for Facial Recognition Technologies.

The History of AI Board warmly welcomes Dr. Lorraine Kisselburgh.

by Admin | Nov 6, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – the ACM named Yoshua Bengio, Geoffrey Hinton, and Yann LeCun recipients of the 2018 Turing Award for breakthroughs that made deep neural networks a critical component of computing. The Turing Award is the most prestigious award in the field and is often considered the Nobel Prize of Computer Science. Other winners include Marvin Minsky and Judea Pearl, both of whom made enormous contributions to Artificial Intelligence.

Yoshua Bengio is a Canadian computer scientist, most notable for his work on neural networks and deep learning. He is an influential scholar and one of the most cited computer scientists. In the 1990s and 2000s, he helped make major advances in the field of deep learning. Bengio is also a Fellow of the Royal Society.

Yann LeCun is a French computer scientist, renowned for his work on deep learning and artificial intelligence. He is also notable for contributions to robotics and computational neuroscience. He is the Silver Professor of the Courant Institute of Mathematical Sciences at NYU. In addition, LeCun is the Chief AI Scientist for Facebook.

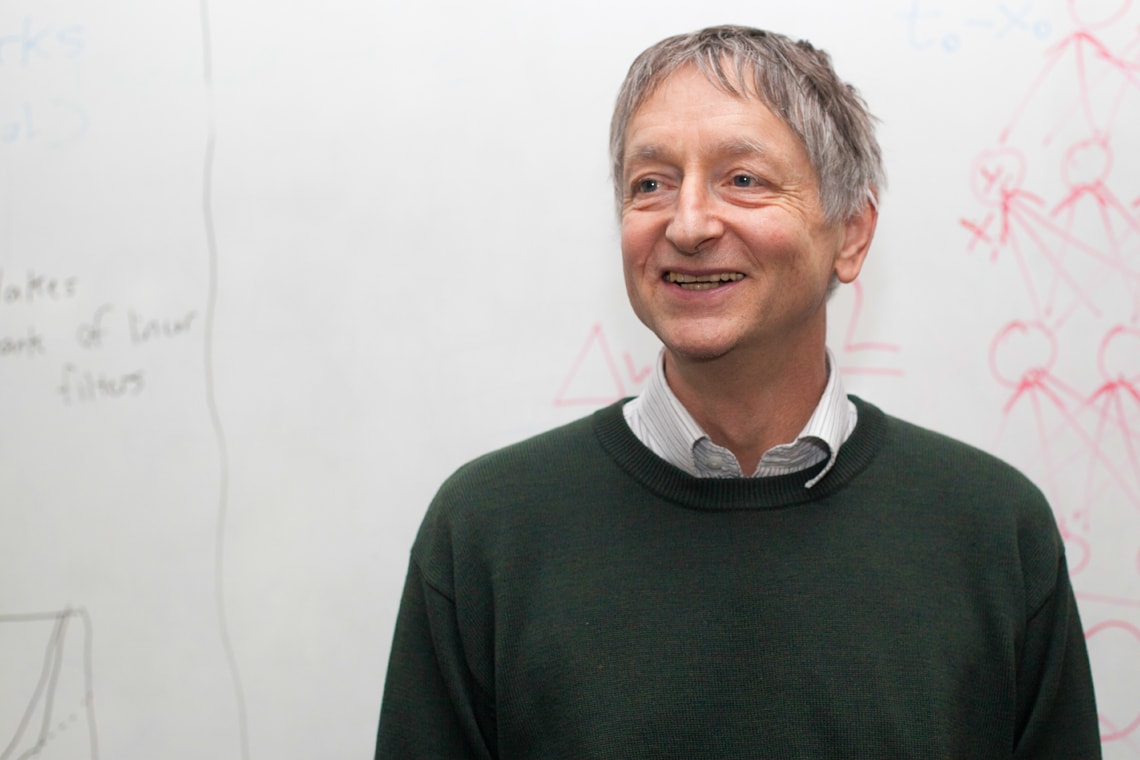

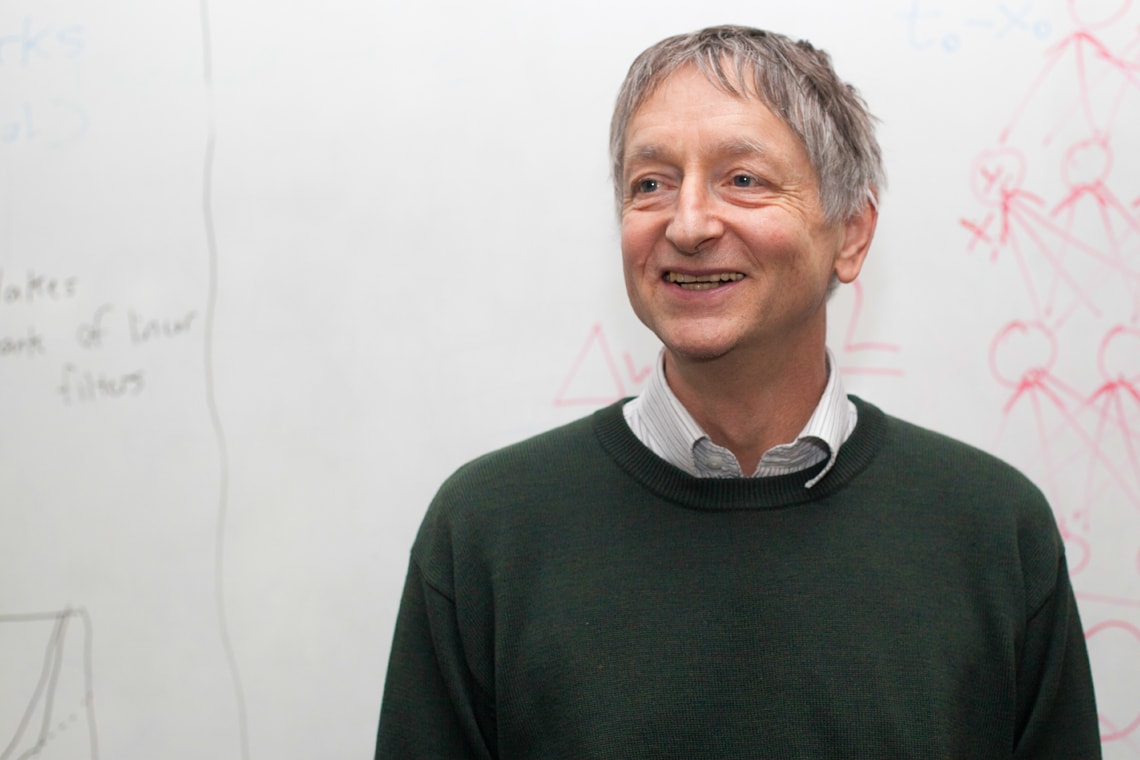

Geoffrey Hinton is an English-Canadian cognitive psychologist and computer scientist. He is most notable for his work on neural networks. He co-authored the seminal paper on backpropagation, “Learning representations by back-propagating errors”, in 1986. He is also known for his work on Deep Learning. Hinton, Yoshua Bengio, and Yann LeCun (who was a postdoctoral researcher under Hinton) are considered the “Fathers of Deep Learning”.

The History of AI Initiative considers this award and the recipients important because they play an important role in Deep Learning, which is a field of Machine Learning, part of Artificial Intelligence. It is an acknowledgement of how far AI has developed, and thus, is a part of the History of AI.

by Admin | Oct 30, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – “Learning Multiple Layers of Representation” by Geoffrey Hinton was published in October 2007. The paper proposed new approaches to deep learning. Backpropagation, which Hinton had helped establish earlier, faced limitations such as requiring labeled training data and working poorly in deep networks. To overcome these, Hinton proposed multilayer neural networks that contain top-down connections and are trained to generate sensory data rather than to classify it. The paper can be read here.

Deep learning is a part of the broader machine learning field in Artificial Intelligence. It is a family of methods based on artificial neural networks with representation learning. It is “deep” in that it uses multiple layers in the networks. Today it is used in a wide range of fields with good results.

Geoffrey Hinton is an English-Canadian cognitive psychologist and computer scientist. He is most notable for his work on neural networks. He is also known for his work on Deep Learning. Hinton, Yoshua Bengio, and Yann LeCun (who was a postdoctoral researcher under Hinton) are considered the “Fathers of Deep Learning”. They were awarded the 2018 ACM Turing Award, considered the Nobel Prize of Computer Science, for their work on deep learning.

This paper is important in the History of AI because it introduces a new perspective on deep learning. Rather than presenting another ground-breaking concept like backpropagation, Hinton shows a different method in the field. Geoffrey Hinton also plays an important role in Deep Learning, which is a field of Machine Learning, part of Artificial Intelligence.

by Admin | Oct 23, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – the market for specialised AI hardware suddenly collapsed in 1987. The cause was that computers from Apple and IBM had become more powerful than Lisp machines and the other specialised hardware used for expert systems. In the 80s, specialised AI hardware such as Lisp machines became very popular because of its effectiveness in the corporate world. However, the machines were expensive to maintain. By the end of the decade, in line with Moore’s Law, computers from Apple and IBM had caught up with them at a far lower price. Because customers no longer needed the more expensive specialised machines, the market for them collapsed.

This collapse of the market led to what is dubbed the Second AI Winter. The collapse coincided with the end of Japan’s Fifth Generation Computer project and the Strategic Computing Initiative in the USA. The expensive nature of expert systems and the lack of demand slowed development in that field. Companies that built Lisp machines went bankrupt or moved away from the field entirely. Thus, the winter spelled the end of expert systems as a major player in AI and computing.

Expert systems are computer systems that emulate human decision-making abilities. They are designed to solve problems through reasoning, and they can perform at the level of human experts. One of the first expert systems was SAINT, developed by James Robert Slagle under the supervision of Marvin Minsky. Lisp machines were designed to run the Lisp programming language, in which many expert systems were written, and were in a way among the first commercial personal workstation computers.

The fall of expert systems highlights lessons that are valuable for the History of AI and for the current development of AI as well. It shows the failure of many in the AI field to adapt. The end of expert systems in popular usage and the beginning of the Second AI Winter are also important milestones in the development of Artificial Intelligence. Thus, the HAI initiative considers this event an important marker in the history of AI.

by Admin | Oct 16, 2020 | Chronicles, News

This week in The History of AI at AIWS.net – David Rumelhart, Geoffrey Hinton, and Ronald Williams published “Learning representations by back-propagating errors” in October 1986. In this paper, they describe “a new learning procedure, back-propagation, for networks of neurone-like units.” The paper popularised the term backpropagation and brought the technique to wide attention in neural network research. The paper can be found here.

David E. Rumelhart was an American psychologist. He is notable for his contributions to the study of human cognition, in mathematical psychology, symbolic artificial intelligence, and connectionism. At the time the paper was published (1986), he was a Professor in the Department of Psychology at the University of California, San Diego. In 1987, he moved to Stanford, where he was a Professor until 1998. Rumelhart also received the MacArthur Fellowship in 1987, and was elected to the National Academy of Sciences in 1991.

Geoffrey Hinton is an English-Canadian cognitive psychologist and computer scientist. He is most notable for his work on neural networks. He is also known for his work on Deep Learning. Hinton, Yoshua Bengio, and Yann LeCun (who was a postdoctoral researcher under Hinton) are considered the “Fathers of Deep Learning”. They were awarded the 2018 ACM Turing Award, considered the Nobel Prize of Computer Science, for their work on deep learning.

Ronald Williams is a computer scientist and a pioneer of neural networks. He is a Professor of Computer Science at Northeastern University. He was an author of the paper “Learning representations by back-propagating errors”, and he also made contributions to recurrent neural networks and reinforcement learning.

The History of AI Initiative considers this paper important because it brought backpropagation to wide attention. Furthermore, the paper created a boom in research into neural networks, a component of AI. Geoffrey Hinton, one of the authors of the paper, would also go on to play an important role in Deep Learning, which is a field of Machine Learning, part of Artificial Intelligence.