by Admin | Nov 12, 2022 | Chronicles, News

This week in The History of AI at AIWS.net – the ACM named Yoshua Bengio, Geoffrey Hinton, and Yann LeCun recipients of the 2018 Turing Award for conceptual and engineering breakthroughs that made deep neural networks a critical component of computing. The Turing Award is one of the most prestigious awards in the field and is often described as the Nobel Prize of Computer Science. Other winners include Marvin Minsky and Judea Pearl, both of whom made enormous contributions to Artificial Intelligence.

Yoshua Bengio is a Canadian computer scientist, most notable for his work on neural networks and deep learning. He is an influential scholar and one of the most cited computer scientists. In the 1990s and 2000s, he helped drive major advances in deep learning. Bengio is also a Fellow of the Royal Society.

Yann LeCun is a French computer scientist, renowned for his work on deep learning and artificial intelligence. He is also notable for contributions to robotics and computational neuroscience. He is the Silver Professor of the Courant Institute of Mathematical Sciences at NYU. In addition, LeCun is the Chief AI Scientist for Facebook.

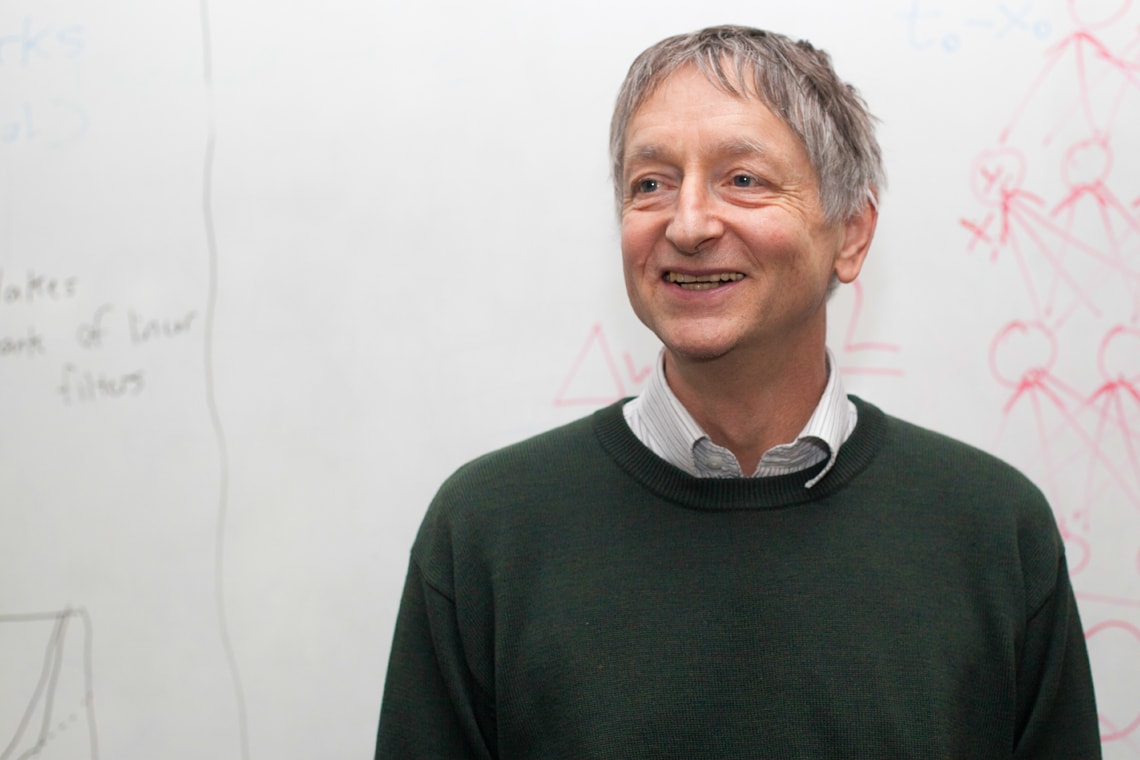

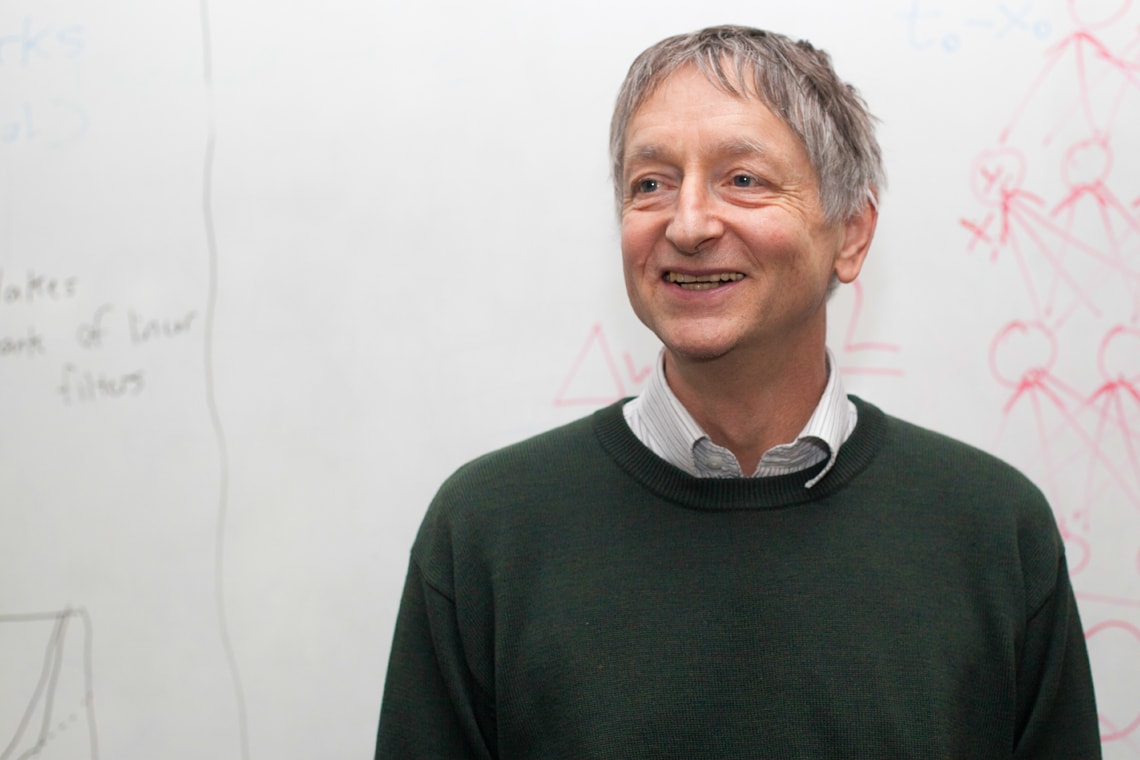

Geoffrey Hinton is an English-Canadian cognitive psychologist and computer scientist. He is most notable for his work on neural networks. He co-authored the seminal paper on backpropagation, “Learning representations by back-propagating errors”, in 1986. He is also known for his work on deep learning. Hinton, Yoshua Bengio, and Yann LeCun (who was a postdoctoral researcher under Hinton) are together considered the “Fathers of Deep Learning”.

The History of AI Initiative considers this award and its recipients important because of the central role they play in Deep Learning, a field of Machine Learning, which is itself part of Artificial Intelligence. The award acknowledges how far AI has developed, and is thus a part of the History of AI.

by Admin | Nov 5, 2022 | Chronicles, News

This week in The History of AI at AIWS.net – Marvin Minsky and Seymour Papert published an expanded edition of Perceptrons in 1988. The original book, published in 1969, explored the concept of the “perceptron” but also highlighted its limitations. The expanded edition added a chapter countering criticisms made of the book in the twenty years after its publication. The original Perceptrons was pessimistic in its predictions for AI, and is thought to have been a cause of the first AI winter.

Marvin Minsky was an important pioneer in the field of AI. He co-authored the research proposal for the Dartmouth Conference, which coined the term “Artificial Intelligence”, and he took part in the conference when it was held the following summer. Minsky also co-founded the MIT AI lab, which went through several names, and the MIT Media Laboratory. In popular culture, he was an adviser on Stanley Kubrick’s acclaimed film 2001: A Space Odyssey. He won the Turing Award in 1969.

Seymour Papert was a South African-born mathematician and computer scientist, associated mainly with MIT for his teaching and research. He was a pioneer in Artificial Intelligence and a co-creator of the Logo programming language, which is widely used in education.

The History of AI initiative considers this republication important because it revisited and furthered discourse on AI. The original book is also regarded as a cause of the first AI winter, a pivotal event in the history of AI. Furthermore, Marvin Minsky was one of the founders of AI. Thus, HAI sees Perceptrons (republished 1988) as meaningful in the development of Artificial Intelligence.

by Admin | Oct 29, 2022 | Chronicles, News

This week in The History of AI at AIWS.net – the Alvey Programme was launched by the British government in 1983. It was developed in response to Japan’s Fifth Generation Computer project. The programme had no single focus or directive; rather, it was intended to support research in knowledge engineering in the UK.

Originally, the UK was invited to take part in Japan’s FGP, and it set up a committee chaired by John Alvey, a technology director at British Telecom. In the end, the UK declined Japan’s invitation and formed the Alvey Programme instead. John Alvey himself, though, was not involved in the programme.

The Alvey Programme was created in response to Japan’s Fifth Generation Computer program, funded by the Japanese Ministry of International Trade and Industry in 1982. The goal of the Japanese program was to create computers built on massively parallel computing and logic programming, and to propel Japan to the top in advanced technology. This would in turn create a platform for future developments in AI. By the time the program ended, opinion of it was divided between those who considered it a failure and those who considered it ahead of its time.

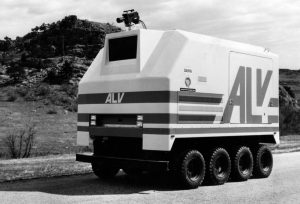

Another program that rivalled the Alvey Programme was America’s Strategic Computing Initiative, founded in 1983 after the first AI winter of the 70s. The initiative supported projects that helped develop machine intelligence, from chip design to AI software. The DoD spent a total of 1 billion USD (not adjusted for inflation) before the program was shut down in 1993. Although the initiative failed to reach its overarching goals, specific targets were still met.

Although the results of the Alvey Programme and the other computer and AI projects of the 80s (Fifth Generation and SCI) were mixed, they helped bring funding back to AI development after the first AI winter of the 70s. The History of AI marks the Alvey Programme as an important event because it is a marker of AI development in the 1980s.

by Admin | Oct 22, 2022 | Chronicles, News

This week in The History of AI at AIWS.net – Marvin Minsky and Seymour Papert published Perceptrons in 1969. The book explored the concept of the “perceptron” but also highlighted its limitations. It was pessimistic in its predictions for AI, and is thought to have been a cause of the first AI winter. A revised and expanded edition was published in 1988, adding a chapter countering criticisms made of the book in the twenty years after its publication.
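The limitation most often associated with the book is that a single perceptron cannot represent a function that is not linearly separable, with XOR as the usual example. The sketch below is an illustration only, not code from the book: the function names, the learning rate, and the epoch limit are all assumptions. It trains one threshold unit with the classic perceptron learning rule on AND (which is linearly separable) and on XOR (which is not).

```python
from itertools import product

def predict(weights, bias, x):
    """Single threshold unit: 1 if the weighted sum exceeds 0, else 0."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0

def train_perceptron(samples, epochs=100, lr=1):
    """Perceptron learning rule; epochs and lr are illustrative choices."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(weights, bias, x)
            if delta != 0:
                errors += 1
                weights[0] += lr * delta * x[0]
                weights[1] += lr * delta * x[1]
                bias += lr * delta
        if errors == 0:  # every sample classified correctly
            break
    return weights, bias

def accuracy(samples, weights, bias):
    """Fraction of samples the trained unit classifies correctly."""
    correct = sum(predict(weights, bias, x) == t for x, t in samples)
    return correct / len(samples)

inputs = list(product([0, 1], repeat=2))
AND = [(x, x[0] & x[1]) for x in inputs]
XOR = [(x, x[0] ^ x[1]) for x in inputs]

and_acc = accuracy(AND, *train_perceptron(AND))
xor_acc = accuracy(XOR, *train_perceptron(XOR))
print(and_acc, xor_acc)
```

On AND the unit reaches full accuracy, while on XOR it never can, however long it trains, because no single straight line separates the two classes.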

Marvin Minsky was an important pioneer in the field of AI. He co-authored the research proposal for the Dartmouth Conference, which coined the term “Artificial Intelligence”, and he took part in the conference when it was held the following summer. Minsky also co-founded the MIT AI lab, which went through several names, and the MIT Media Laboratory. In popular culture, he was an adviser on Stanley Kubrick’s acclaimed film 2001: A Space Odyssey. He won the Turing Award in 1969.

Seymour Papert was a South African-born mathematician and computer scientist, associated mainly with MIT for his teaching and research. He was a pioneer in Artificial Intelligence and a co-creator of the Logo programming language, which is widely used in education.

The History of AI initiative considers this publication important because it furthered discourse on AI, specifically on perceptrons. The book is also regarded as a cause of the first AI winter, a pivotal event in the history of AI. Furthermore, Marvin Minsky was one of the founders of AI. Thus, HAI sees Perceptrons as meaningful in the development of Artificial Intelligence.

by Admin | Oct 15, 2022 | Chronicles, News

This week in The History of AI at AIWS.net – Unimate, an industrial robot developed in the 50s, became the first industrial robot to work on an assembly line, at a plant in New Jersey, in 1961.

Unimate was invented by George Devol, who filed the patent in 1954. Devol met Joseph Engelberger in 1956, and the two paired up to found Unimation, the first robot manufacturing company. Devol and Engelberger promoted Unimate on The Tonight Show. Engelberger later took industrial robotics to markets outside the US as well.

The Unimate worked on a General Motors assembly line at the Inland Fisher Guide Plant in New Jersey. The robot transported die castings from the assembly line and welded parts onto car bodies. It was given this job because the work was considered dangerous for human workers, due to workplace hazards such as toxic fumes. The robot looked like a box connected to an arm, with its sequence of tasks stored in a drum memory.

Although this machine was not directly connected to Artificial Intelligence, it was a precursor to developments in that field. By putting a robot to work on real tasks, this project took some of the first steps towards AI. Thus, the HAI initiative considers this a milestone in the History of AI.

by Admin | Oct 7, 2022 | Chronicles, News

This week in The History of AI at AIWS.net – “Learning Multiple Layers of Representation” by Geoffrey Hinton was published in October 2007. The paper proposed new approaches to deep learning. Backpropagation, which Hinton had helped popularise earlier, also trains multilayer neural networks, but it faces limitations such as requiring labeled training data. In its place, Hinton proposes multilayer networks that learn to generate their input data rather than to classify it, so that they can be trained without labels. The paper can be read here.

Deep learning is a part of the broader machine learning field in Artificial Intelligence. It is a family of methods based on artificial neural networks with representation learning. It is “deep” in that it uses multiple layers in the networks. Today, it is used in a wide range of fields with good results.
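To make “multiple layers” concrete, here is a minimal sketch of a two-layer network of threshold units that computes XOR, a function that no single threshold unit can compute. The weights are set by hand for illustration rather than learned, and the names `step`, `layer`, and `xor_net` are hypothetical.

```python
def step(z):
    """Threshold activation: 1 if z is positive, else 0."""
    return 1 if z > 0 else 0

def layer(inputs, weights, biases):
    """One layer: each unit thresholds a weighted sum of all inputs."""
    return [step(sum(w * x for w, x in zip(unit_w, inputs)) + b)
            for unit_w, b in zip(weights, biases)]

def xor_net(a, b):
    # Hidden layer: unit 0 fires for (a OR b), unit 1 fires for (a AND b).
    hidden = layer([a, b], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Output layer: fires when OR is on and AND is off, which is XOR.
    return layer(hidden, weights=[[1, -1]], biases=[-0.5])[0]

outputs = [xor_net(a, b) for a in (0, 1) for b in (0, 1)]
print(outputs)  # [0, 1, 1, 0]
```

The hidden layer builds intermediate representations (OR and AND) that the output layer combines, which is the sense in which stacked layers can express what one layer cannot.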

Geoffrey Hinton is an English-Canadian cognitive psychologist and computer scientist. He is most notable for his work on neural networks and is also known for his work on deep learning. Hinton, Yoshua Bengio, and Yann LeCun (who was a postdoctoral researcher under Hinton) are together considered the “Fathers of Deep Learning.” They were awarded the 2018 ACM Turing Award, often described as the Nobel Prize of Computer Science, for their work on deep learning.

This paper is important in the History of AI because it introduces a new perspective on deep learning. Rather than presenting another ground-breaking concept like backpropagation, Hinton shows a different way to train deep networks. Geoffrey Hinton also plays an important role in Deep Learning, a field of Machine Learning, which is itself part of Artificial Intelligence.

by Admin | Sep 30, 2022 | Chronicles, News

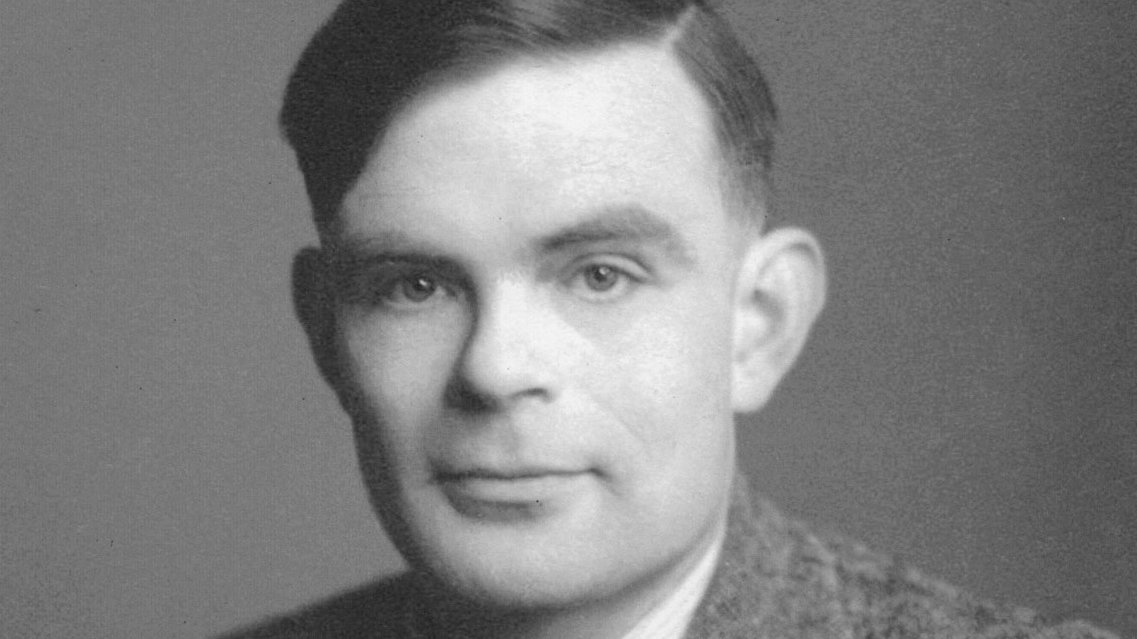

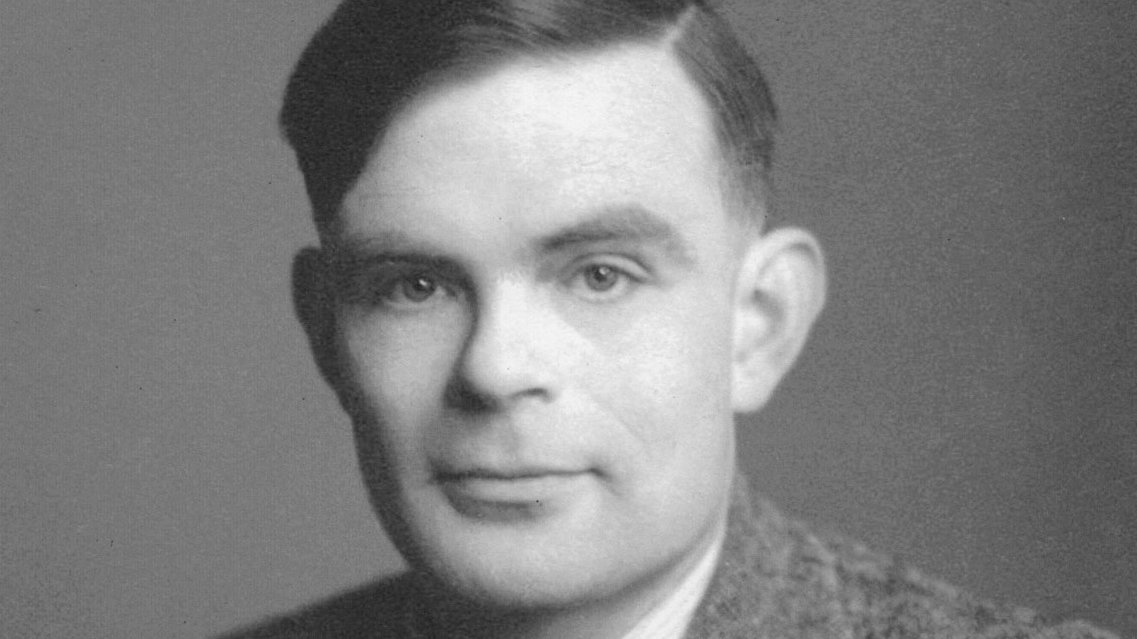

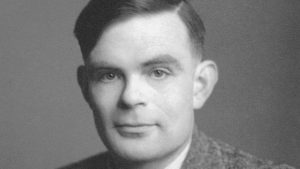

This week in The History of AI at AIWS.net – “Computing Machinery and Intelligence” by Alan Turing was published in the quarterly academic journal Mind in October 1950. It was the first time the “Turing test” was introduced to the public. The paper takes the question “Can machines think?” and breaks it down. It also addresses nine objections to Artificial Intelligence, including the Religious, “Heads in the Sand”, and Mathematical objections. Turing also wrote about a potential “Learning Machine” that could successfully pass the Turing test.

The Turing test, also known as the imitation game, is a test of whether a machine’s behaviour can be told apart from a human’s. In it, a human evaluator judges conversations between a human and a machine designed to give human-like responses; if the evaluator cannot reliably tell the machine from the human, the machine passes the test. The test has proven to be both influential and controversial.

Alan Turing was a British computer scientist and cryptanalyst. He developed the Turing machine, a model of a general-purpose computer, in 1936. During the Second World War, he worked at Bletchley Park (the Government Code and Cypher School) as a codebreaker for the United Kingdom. During his time there, he played a critical role in breaking Enigma, Germany’s infamous wartime encryption system. Breaking Enigma helped turn the tide of the war in favour of the Allies. After the war, he went on to propose the Turing test in 1950. Alan Turing is widely considered the father of modern Artificial Intelligence, as well as being highly influential in theoretical computer science. The “Nobel Prize of Computing”, the ACM Turing Award, is named after him.

The History of AI initiative considers this event important because “Computing Machinery and Intelligence” is a seminal paper in both Computer Science and AI.

The paper introduced many new concepts in CS and AI to the general public. Alan Turing is also a pivotal figure in the development of Artificial Intelligence, computing, and machine learning. Thus, the publication of this paper is a critical moment in the History of AI.

by Admin | Sep 24, 2022 | Chronicles, News

This week in The History of AI at AIWS.net – computer scientist Judea Pearl published Probabilistic Reasoning in Intelligent Systems in 1988. The book is, according to the publisher, about “the theoretical foundations and computational methods that underlie plausible reasoning under uncertainty.” It covers topics such as AI systems, Markov and Bayesian networks, network propagation, and more. The Association for Computing Machinery (ACM) hailed the book as “[o]ne of the most cited works in the history of computer science” and said that it “initiated the modern era in AI and converted many researchers who had previously worked in the logical and neural-network communities.”

1988 falls near the end of the second AI boom, which was driven by the Strategic Computing Initiative, Japan’s Fifth Generation project, and counterparts in other countries. The bust that followed, known as the second AI winter, lasted from the late 1980s to 1993. It was driven by the perception among governments and investors that the field was failing, even though advances were still being made.

Judea Pearl is a renowned Israeli-American computer scientist. He is a pioneer of Bayesian networks, probabilistic approaches to AI, and causal inference. He is also known for his other books, Causality: Models, Reasoning, and Inference (2000) and The Book of Why: The New Science of Cause and Effect (2018). Professor Pearl won the Turing Award, one of the highest honours in the field of computer science, in 2011 for his work on AI through probabilistic and causal reasoning. He is a Chancellor’s Professor at UCLA.

Probabilistic Reasoning in Intelligent Systems (1988) can be accessed through the ACM digital library, which also has other resources on computer science and AI.

Due to the impact the book has had, the History of AI initiative considers it an important marker in AI history. Professor Judea Pearl is one of the most influential computer scientists in the world. He is a Mentor of the AI World Society Innovation Network (AIWS.net) and sits on the History of AI Board. He was honored as the 2020 World Leader in AI World Society by the Michael Dukakis Institute and the Boston Global Forum.

by Admin | Sep 16, 2022 | Chronicles, News

This week in The History of AI at AIWS.net – computer scientist James Robert Slagle developed SAINT in 1961. SAINT stands for Symbolic Automatic INTegrator. It was a heuristic program that could solve symbolic integration problems at the level of freshman calculus. The program was developed as part of Slagle’s dissertation at MIT, with Marvin Minsky’s help. SAINT is considered the first expert system, that is, a system that performs at the level of a human expert. SAINT was also one of the first projects that tried to produce a program that could come close to passing the Turing test.

James Robert Slagle is an American computer scientist. He worked on SAINT for his dissertation at MIT with Marvin Minsky, and received his PhD in Mathematics from MIT later in 1961. He is a Professor in Computer Science who has held appointments at institutions such as MIT, Johns Hopkins, UC Berkeley, and the University of Minnesota.

Marvin Minsky also played a role in this project, as Slagle worked with him on this part of his dissertation. Minsky was an important pioneer in the field of AI. He co-authored the research proposal for the Dartmouth Conference, which coined the term “Artificial Intelligence”, and he took part in the conference when it was held the following summer. Minsky also co-founded the MIT AI lab, which went through several names, and the MIT Media Laboratory. In popular culture, he was an adviser on Stanley Kubrick’s acclaimed film 2001: A Space Odyssey. Minsky won the Turing Award in 1969.

The full dissertation can be found here. This project and dissertation are special in regard to AI because they mark another step in its development, most notably as the first expert system. Although it was only a minor project, the HAI initiative regards it as another pioneering attempt in the History of AI.

by Admin | Sep 9, 2022 | Chronicles, News

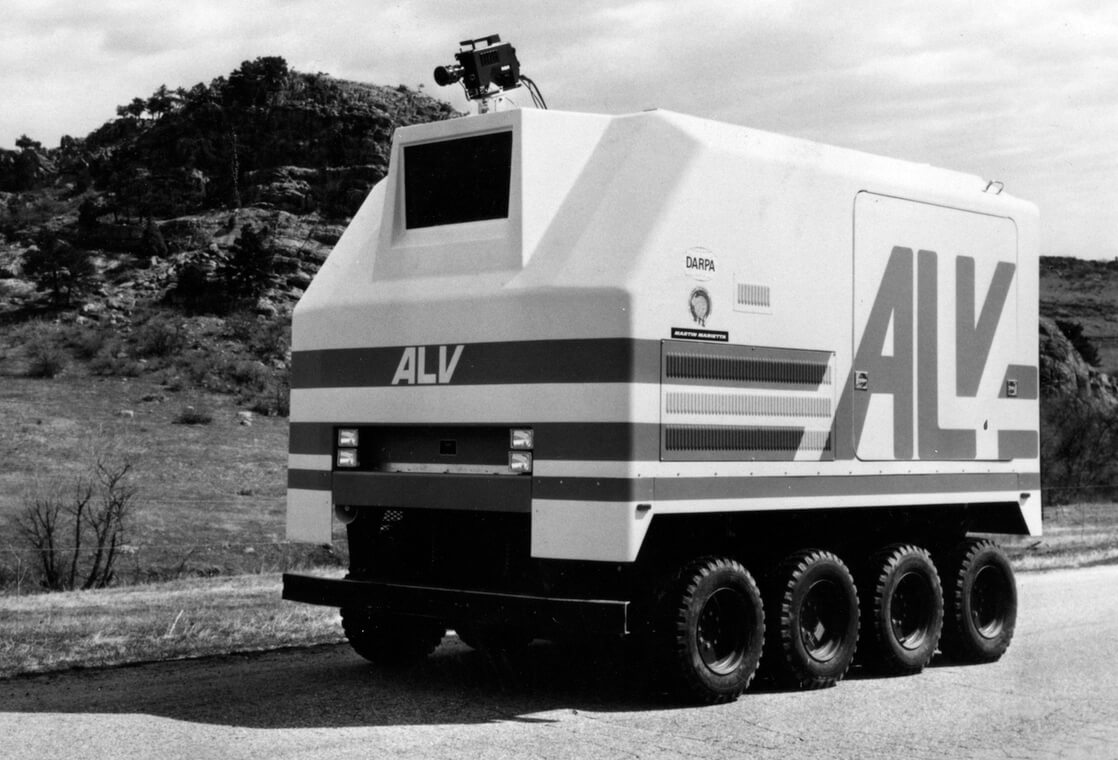

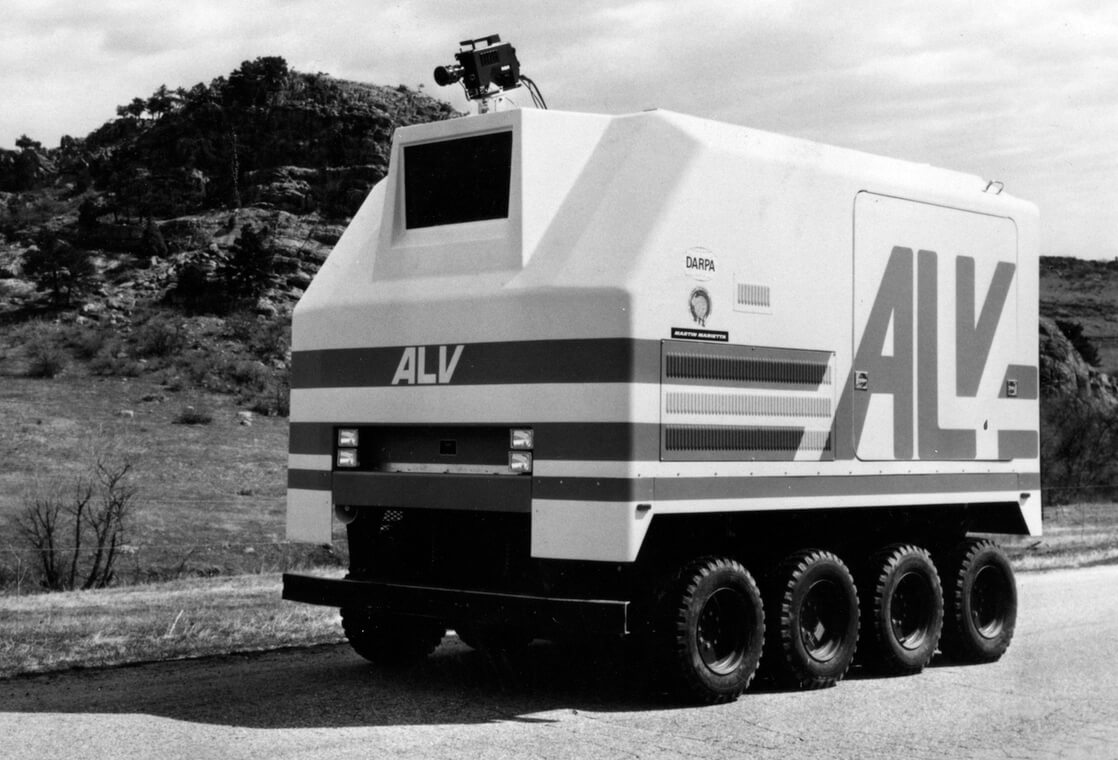

This week in The History of AI at AIWS.net – DARPA founded the Strategic Computing Initiative in 1983 to fund research into advanced computer hardware and artificial intelligence. DARPA stands for the Defense Advanced Research Projects Agency, a research and development agency founded by the US Department of Defense in 1958 as ARPA. Although its work was aimed at military use, many of the innovations the agency funded went beyond the requirements of the US military. Technologies that emerged with DARPA’s backing include computer networking and graphical user interfaces. DARPA works with academia and industry and reports directly to senior DoD officials.

The Strategic Computing Initiative was founded in 1983, after the first AI winter in the 70s. The initiative supported projects that helped develop machine intelligence, from chip design to AI software. The DoD spent a total of 1 billion USD (not adjusted for inflation) before the program’s shutdown in 1993, even though there were several cuts in the late 80s. Although the initiative failed to reach its overarching goals, specific targets were still met.

The Strategic Computing Initiative was created in response to Japan’s Fifth Generation Computer program, funded by the Japanese Ministry of International Trade and Industry in 1982. The goal of the Japanese program was to create computers built on massively parallel computing and logic programming, and to propel Japan to the top in advanced technology. This would in turn create a platform for future developments in AI. By the time the program ended, opinion of it was divided between those who considered it a failure and those who considered it ahead of its time.

Although the results of the SCI and other computer and AI projects of the 80s were mixed, they helped bring funding back to AI development after the first AI winter of the 70s. The History of AI marks the Strategic Computing Initiative as an important event because it revived AI in the US. The winding down of the SCI towards its end also signalled a second AI winter.