Chronicles

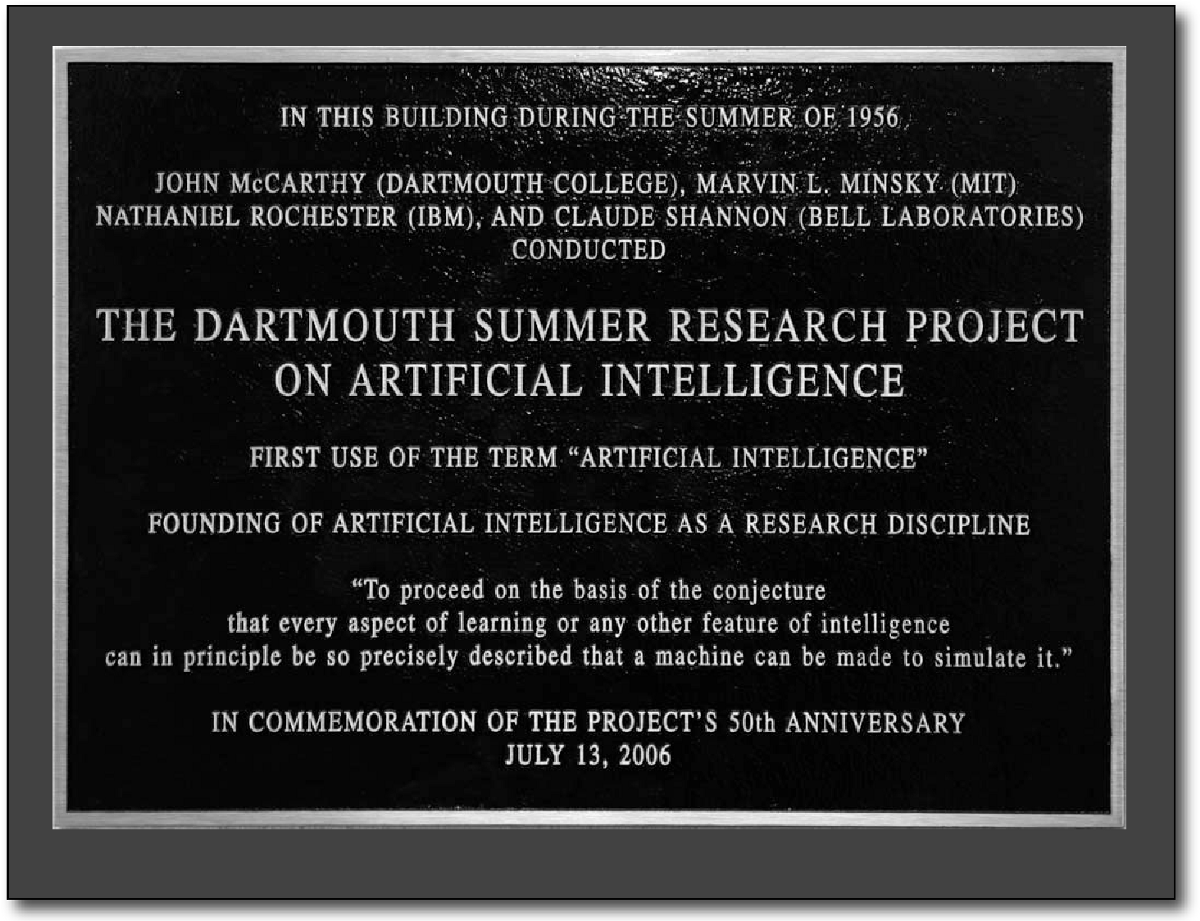

This week in The History of AI at AIWS.net – the Dartmouth Summer Research Project on Artificial Intelligence was proposed

This week in The History of AI at AIWS.net - the Dartmouth Summer Research Project on Artificial Intelligence was proposed. The proposal was submitted on September 2, 1955, but written on August 31, 1955. It was the collaboration of John McCarthy, Marvin Minsky,...

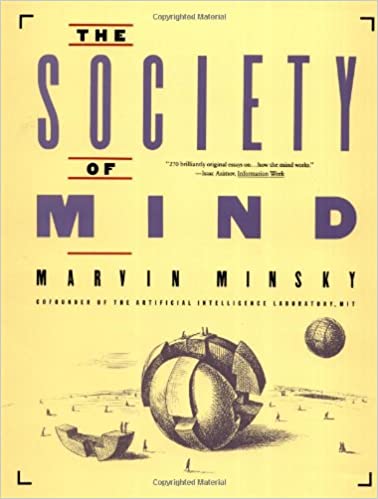

This week in The History of AI at AIWS.net – “The Society of Mind” was published by Marvin Minsky

This week in The History of AI at AIWS.net - Marvin Minsky publishes The Society of Mind in 1987. This book is a theoretical description of the mind as a collection of cooperating agents. Marvin Minsky was an American cognitive and computer scientist. He penned the...

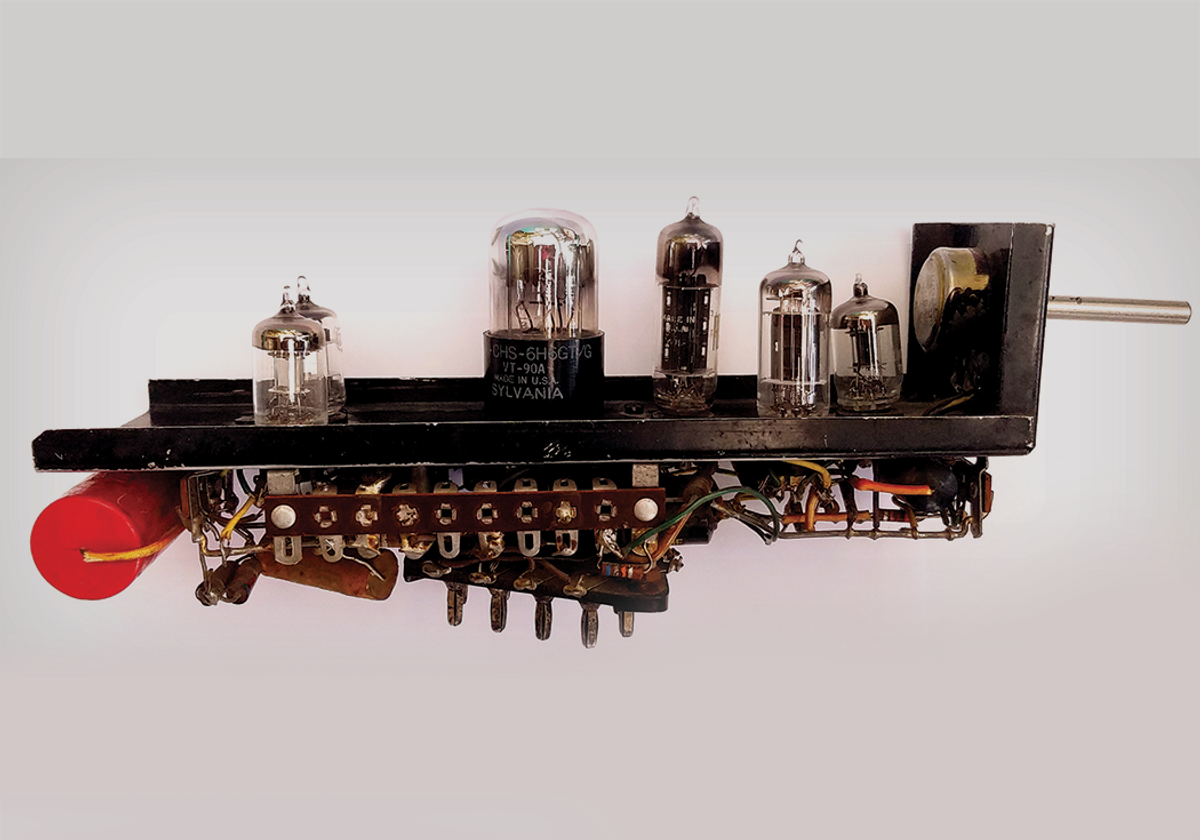

This week in The History of AI at AIWS.net – Marvin Minsky and Dean Edmonds built SNARC

This week in The History of AI at AIWS.net - Marvin Minsky and Dean Edmonds built SNARC, the first artificial neural network, in 1951. SNARC stands for the Stochastic Neural Analog Reinforcement Calculator. It is a neural net machine, which itself is a randomly...

This week in The History of AI at AIWS.net – the Dartmouth Conference ended on August 17th, 1956

This week in The History of AI at AIWS.net - the Dartmouth Conference ended on August 17th, 1956. This gathering lasted the entire summer at Dartmouth College in Hanover, New Hampshire, having started on 16th June. The Dartmouth Conference was originally dreamt up by...

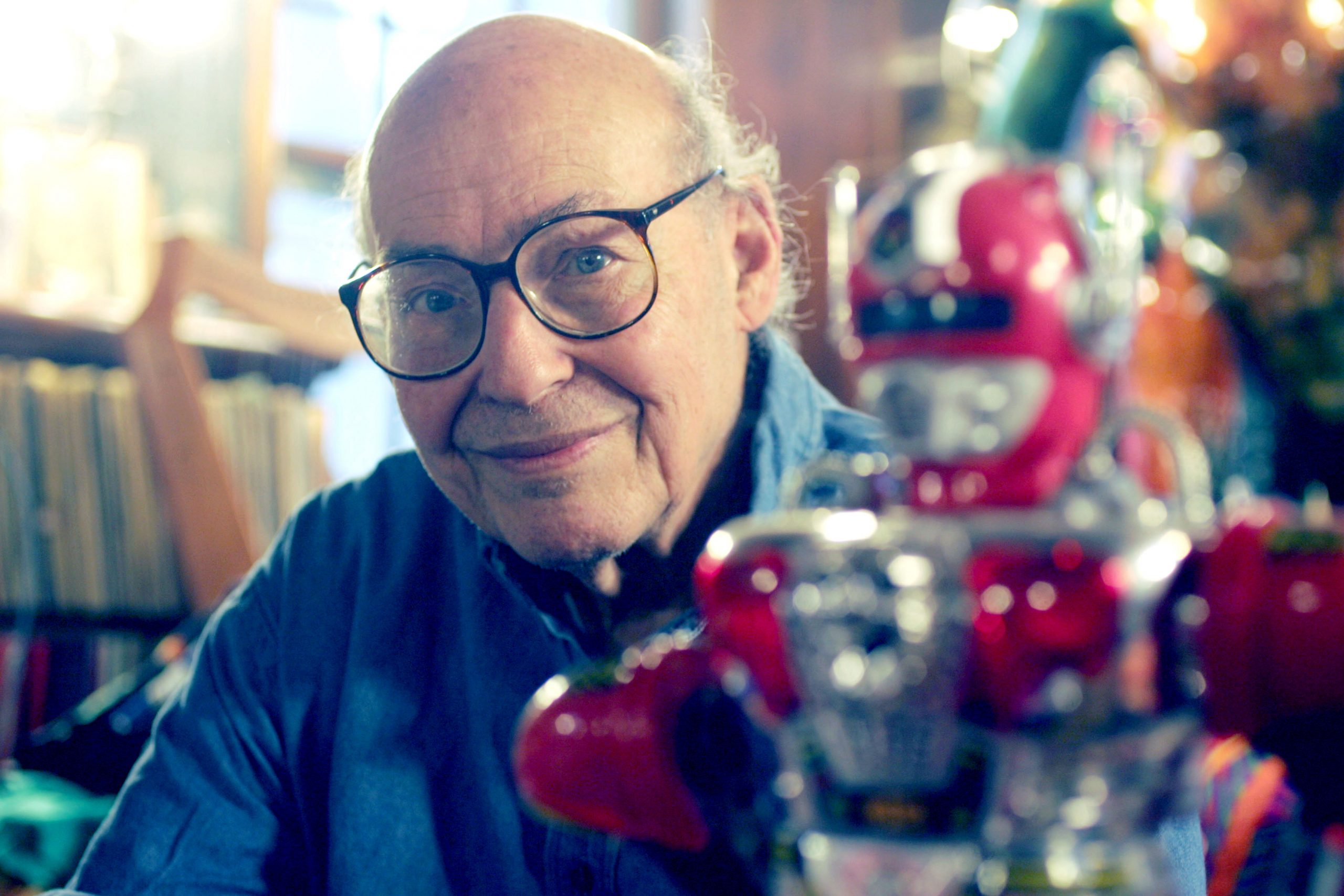

This week at the History of AI – Marvin Minsky was born on August 9th, 1927

This week at the History of AI - Marvin Minsky was born on August 9th, 1927. Minsky was one of the most influential AI scientists and an important pioneer in the field of AI. He penned the research proposal for the Dartmouth Conference, which coined the...

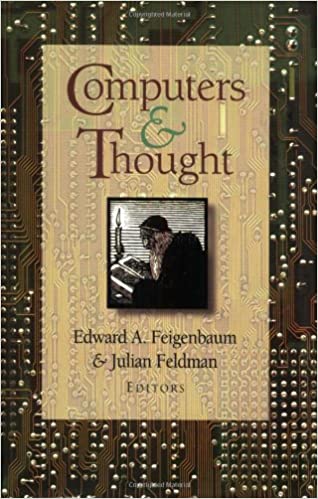

This week in The History of AI at AIWS.net – Edward Feigenbaum and Julian Feldman published “Computers and Thought”

This week in The History of AI at AIWS.net - Edward Feigenbaum and Julian Feldman published Computers and Thought in 1963, the first book of its kind: a collection of articles on artificial intelligence. Feigenbaum and Feldman edited and wrote some of the articles but...

This week in The History of AI at AIWS.net – John McCarthy proposed the ‘advice taker’ in 1959

This week in The History of AI at AIWS.net – John McCarthy proposed the “advice taker” in his paper “Programs with Common Sense.” This hypothetical program was the first to use logic to represent information. The paper was published in 1959. John McCarthy was an...

This week in The History of AI at AIWS.net – Frank Rosenblatt developed the Perceptron

This week in The History of AI at AIWS.net - Frank Rosenblatt developed the Perceptron in 1957. It is a form of neural network that allowed pattern recognition. Frank Rosenblatt was an American psychologist. Born in 1928, Rosenblatt would go on to study at Cornell...

This week in The History of AI at AIWS.net – Bletchley Park cryptologists broke the German Enigma code

This week in The History of AI at AIWS.net - Bletchley Park cryptologists broke the German Enigma code on 9th July, 1941. Alan Turing, considered the founder of computer science and AI, played a vital role in this process by developing the Bombe. Alan Turing was a...

This week in The History of AI at AIWS.net – The Democratic Alliance on Digital Governance Conference was hosted on July 1st, 2020

This week in The History of AI at AIWS.net - The Democratic Alliance on Digital Governance Conference was hosted on July 1st, 2020. The subheading of this event was “Protecting and Strengthening Democracy in the Aftermath of COVID-19.” This conference was a...

The Artificial Intelligence Chronicle

384 BC–322 BC Aristotle described the syllogism in The Organon: a method of formal, mechanical thought and theory of knowledge, and the first formal deductive reasoning system.

1st century Heron of Alexandria created mechanical men and other automatons.

260 Porphyry of Tyros wrote Isagogê, which categorized knowledge and logic.

~800 Geber developed the Arabic alchemical theory of Takwin, the artificial creation of life in the laboratory, up to and including human life.

1206 Al-Jazari created a programmable orchestra of mechanical human beings.

Talking heads were said to have been created, Roger Bacon and Albert the Great reputedly among the owners.

In 1206 A.D., Al-Jazari, an Arab inventor, designed what is believed to be the first programmable humanoid robot, a boat carrying four mechanical musicians powered by water flow.

1275 Ramon Llull, Spanish theologian, invents the Ars Magna, a tool for combining concepts mechanically, based on an Arabic astrological tool, the Zairja. The method would be developed further by Gottfried Leibniz in the 17th century.

1308 Catalan poet and theologian Ramon Llull publishes Ars generalis ultima (The Ultimate General Art), further perfecting his method of using paper-based mechanical means to create new knowledge from combinations of concepts.

15th century

- Invention of printing using moveable type. Gutenberg Bible printed (1456).

15th-16th century

- Clocks, the first modern measuring machines, were first produced using lathes.

~1500 Paracelsus claimed to have created an artificial man out of magnetism, sperm and alchemy.

16th century

- Clockmakers extended their craft to creating mechanical animals and other novelties. For example, see da Vinci’s walking lion (1515).

~1580 Rabbi Judah Loew ben Bezalel of Prague is said to have invented the Golem, a clay man brought to life.

Early 1700s: Depictions of all-knowing machines akin to computers were more widely discussed in popular literature. Jonathan Swift’s novel “Gulliver’s Travels” mentioned a device called the Engine, one of the earliest references to modern-day technology, specifically a computer. The device’s intended purpose was to improve knowledge and mechanical operations to a point where even the least talented person would seem to be skilled, all with the assistance and knowledge of a non-human mind (mimicking artificial intelligence).

- Early in the 17th century, Descartes proposed that bodies of animals are nothing more than complex machines. Many other 17th century thinkers offered variations and elaborations of Cartesian mechanism.

Early 17th century René Descartes proposed that bodies of animals are nothing more than complex machines (but that mental phenomena are of a different “substance”).

1620 Sir Francis Bacon developed an empirical theory of knowledge and introduced inductive logic in his work The New Organon, a play on Aristotle’s title The Organon.

1623 Wilhelm Schickard drew a calculating clock on a letter to Kepler. This will be the first of five unsuccessful attempts at designing a direct entry calculating clock in the 17th century (including the designs of Tito Burattini, Samuel Morland and René Grillet).

1651 Thomas Hobbes published Leviathan and presented a mechanical, combinatorial theory of cognition. He wrote “…for reason is nothing but reckoning”.

1642 Blaise Pascal invented the mechanical calculator, the first digital calculating machine.

- Thomas Hobbes published The Leviathan (1651), containing a mechanistic and combinatorial theory of thinking.

- Arithmetical machines devised by Sir Samuel Morland between 1662 and 1666

1666 Mathematician and philosopher Gottfried Leibniz publishes Dissertatio de arte combinatoria (On the Combinatorial Art), following Ramon Llull in proposing an alphabet of human thought and arguing that all ideas are nothing but combinations of a relatively small number of simple concepts.

1672 Gottfried Leibniz improved the earlier machines, making the Stepped Reckoner to do multiplication and division. He also invented the binary numeral system and envisioned a universal calculus of reasoning (alphabet of human thought) by which arguments could be decided mechanically. Leibniz worked on assigning a specific number to each and every object in the world, as a prelude to an algebraic solution to all possible problems.

18th century

1726 Jonathan Swift publishes Gulliver’s Travels, which includes a description of the Engine, a machine on the island of Laputa (and a parody of Llull’s ideas): “a Project for improving speculative Knowledge by practical and mechanical Operations.” By using this “Contrivance,” “the most ignorant Person at a reasonable Charge, and with a little bodily Labour, may write Books in Philosophy, Poetry, Politicks, Law, Mathematicks, and Theology, with the least Assistance from Genius or study.” The machine is a parody of Ars Magna, one of the inspirations of Gottfried Leibniz’s mechanism. (3)

1750 Julien Offray de La Mettrie published L’Homme Machine, which argued that human thought is strictly mechanical. (1)

1763 Thomas Bayes develops a framework for reasoning about the probability of events. Bayesian inference will become a leading approach in machine learning. (1)

1769 Wolfgang von Kempelen built and toured with his chess-playing automaton, The Turk. The Turk was later shown to be a hoax, involving a human chess player. (3)

- The 18th century saw a profusion of mechanical toys, including the celebrated mechanical duck of Vaucanson and von Kempelen’s phony mechanical chess player, The Turk (1769). Edgar Allan Poe wrote (in the Southern Literary Messenger, April 1836) that the Turk could not be a machine because, if it were, it would not lose.

19th century

- Joseph-Marie Jacquard invented the Jacquard loom, the first programmable machine, with instructions on punched cards (1801). (2)

- The Luddites (led by Ned Ludd) destroyed machinery in England (1811-1816). See Luddites by Marjie Bloy, PhD (Victorian Web), and What the Luddites Really Fought Against by Richard Conniff, Smithsonian magazine (March 2011). (3)

- Mary Shelley published the story of Frankenstein’s monster (1818). The book Frankenstein, or the Modern Prometheus is available from Project Gutenberg. (1)

- Charles Babbage & Ada Byron (Lady Lovelace) designed a programmable mechanical calculating machine, the Analytical Engine (1832). A working model was built in 2002.

1837 The mathematician Bernard Bolzano made the first modern attempt to formalize semantics. (1)

1854 George Boole argued that logical reasoning could be performed systematically in the same manner as solving a system of equations. He developed a binary algebra representing (some) “laws of thought,” published in The Laws of Thought (1854). (1)

1863 Samuel Butler suggested that Darwinian evolution also applies to machines, and speculated that they will one day become conscious and eventually supplant humanity. (1)

- 1872: Author Samuel Butler’s novel “Erewhon” toyed with the idea that at an indeterminate point in the future machines would have the potential to possess consciousness. (1)

- Modern propositional logic was developed by Gottlob Frege in his 1879 work Begriffsschrift and later clarified and expanded by Russell, Tarski, Gödel, Church and others. (1)

- 1898 At an electrical exhibition in the recently completed Madison Square Garden, Nikola Tesla makes a demonstration of the world’s first radio-controlled vessel. The boat was equipped with, as Tesla described, “a borrowed mind.” (3)

AI from 1900-1950

Once the 1900s arrived, innovation in artificial intelligence accelerated significantly.

- Bertrand Russell and Alfred North Whitehead published Principia Mathematica (1910-1913), which revolutionized formal logic. Russell, Ludwig Wittgenstein, and Rudolf Carnap led philosophy into logical analysis of knowledge. (1)

- 1914 The Spanish engineer Leonardo Torres y Quevedo demonstrates the first chess-playing machine, ‘Ajedrecista’, which used electromagnets under the board to play the king-and-rook versus lone king endgame without any human intervention. Built in 1912, it is possibly the first computer game. (3)

1921: Karel Čapek, a Czech playwright, released his science fiction play “Rossum’s Universal Robots” (R.U.R.). The play explored the concept of factory-made artificial people, whom he called robots: the first known reference to the word. From this point onward, people took the “robot” idea and implemented it into their research, art, and discoveries. (2)

1925 Houdina Radio Control releases a radio-controlled driverless car, travelling the streets of New York City. (3)

1927: The sci-fi film Metropolis, directed by Fritz Lang, featured a robot double of a peasant girl, Maria, that was physically indistinguishable from the human whose likeness it took. The artificially intelligent robot unleashes chaos in a futuristic Berlin of 2026. It was the first robot depicted on film, and it lent inspiration to other famous non-human characters, including the Art Deco look of C-3PO in Star Wars. (2)

1929: Japanese biologist and professor Makoto Nishimura created Gakutensoku, the first robot to be built in Japan. Gakutensoku translates to “learning from the laws of nature,” implying the robot’s artificially intelligent mind could derive knowledge from people and nature. Its features included changing its facial expressions and moving its head and hands via an air pressure mechanism. (3)

1920s and 1930s Ludwig Wittgenstein and Rudolf Carnap led philosophy into logical analysis of knowledge. Alonzo Church developed Lambda Calculus to investigate computability using recursive functional notation. (1)

1931 Kurt Gödel showed that sufficiently powerful formal systems, if consistent, permit the formulation of true theorems that are unprovable by any theorem-proving machine deriving all possible theorems from the axioms. To do this he had to build a universal, integer-based programming language, which is the reason why he is sometimes called the “father of theoretical computer science”. (1)

Alan Turing proposed the universal Turing machine (1936-37)

1939: John Vincent Atanasoff (physicist and inventor), alongside his graduate student assistant Clifford Berry, created the Atanasoff-Berry Computer (ABC) with a grant of $650 at Iowa State University. The ABC weighed over 700 pounds and could solve up to 29 simultaneous linear equations.

1939 Elektro, a mechanical man, was introduced by Westinghouse Electric at the World’s Fair in New York, along with Sparko, a mechanical dog. The relay-based Elektro robot responded to the rhythm of voice commands, delivered wisecracks pre-recorded on 78 rpm records, could move its head and arms, and even “smoked” cigarettes.

1940 Edward Condon displays Nimatron, a digital computer that played Nim perfectly. (3)

1941 Konrad Zuse built the first working program-controlled computers. (3)

1941 The Three Laws of Robotics: Isaac Asimov publishes the science fiction short story Liar! in the May issue of Astounding Science Fiction. In it, he introduced the Three Laws of Robotics:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

This is thought to be the first known use of the term “robotics.” (1,2)

1943 Warren S. McCulloch and Walter Pitts publish “A Logical Calculus of the Ideas Immanent in Nervous Activity” in the Bulletin of Mathematical Biophysics. This influential paper, in which they discussed networks of idealized and simplified artificial “neurons” and how they might perform simple logical functions, will become the inspiration for computer-based “neural networks” (and later “deep learning”) and their popular description as “mimicking the brain.”

Two scientists, Warren S. McCulloch and Walter H. Pitts, publish the groundbreaking paper A Logical Calculus of the Ideas Immanent in Nervous Activity. The paper quickly became a foundational work in the study of artificial neural networks and has many applications in artificial intelligence research. In it McCulloch and Pitts described a simplified neural network architecture for intelligence, and while the neurons they described were greatly simplified compared to biological neurons, the model they proposed was enhanced and improved upon by subsequent generations of researchers. (1)
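To make “simple logical functions” concrete, here is a minimal Python sketch of a binary threshold neuron in the spirit of the McCulloch-Pitts model. It is a simplified modern formulation, not the paper’s own notation: the names `mcp_neuron`, `AND`, `OR` and `NOT` are hypothetical, and inhibition is modeled here as a negative weight, whereas the 1943 paper treated an active inhibitory input as absolutely preventing the neuron from firing.

```python
from typing import Sequence


def mcp_neuron(inputs: Sequence[int], weights: Sequence[int], threshold: int) -> int:
    """Binary threshold unit: fire (1) when the weighted input sum reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# With fixed unit weights, choosing the threshold selects the logical function.
def AND(a: int, b: int) -> int:
    return mcp_neuron([a, b], [1, 1], threshold=2)  # both inputs must be active


def OR(a: int, b: int) -> int:
    return mcp_neuron([a, b], [1, 1], threshold=1)  # one active input suffices


def NOT(a: int) -> int:
    # Simplification: inhibition as a negative weight, so the unit fires only when a == 0.
    return mcp_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(f"a={a} b={b}  AND={AND(a, b)}  OR={OR(a, b)}")
    print("NOT 0 =", NOT(0), " NOT 1 =", NOT(1))
```

Because the output of one unit can feed the input of another, units like these can be wired into networks that compute more complex logical expressions, which is the sense in which the paper connected nervous activity to logic.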

Emil Post proves that production systems are a general computational mechanism (1943). See Ch.2 of Rule Based Expert Systems for the uses of production systems in AI. Post also did important work on completeness, inconsistency, and proof theory. (1)

1944 Game theory, which would prove invaluable in the progress of AI, was introduced with the book Theory of Games and Economic Behavior by mathematician John von Neumann and economist Oskar Morgenstern. (1)

1945 Vannevar Bush published As We May Think (The Atlantic Monthly, July 1945) a prescient vision of the future in which computers assist humans in many activities. (1)

- Arturo Rosenblueth, Norbert Wiener & Julian Bigelow coin the term “cybernetics” in a 1943 paper. Wiener’s popular book by that name was published in 1948. (1)

- George Polya published his best-selling book on thinking heuristically, How to Solve It in 1945. This book introduced the term ‘heuristic’ into modern thinking and has influenced many AI scientists. (1)


- Grey Walter experimented with autonomous robots, turtles named Elsie and Elmer, at Bristol (1948-49) based on the premise that a small number of brain cells could give rise to complex behaviors. (3)

- A.M. Turing published “Computing Machinery and Intelligence” (1950), introducing the Turing Test as a way of operationalizing a test of intelligent behavior. See The Turing Institute for more on Turing. (3)

1948 Cybernetics: Norbert Wiener publishes the book Cybernetics, which has a major influence on research into artificial intelligence and control systems. Wiener drew on his World War II experiments with anti-aircraft systems that anticipated the course of enemy planes by interpreting radar images. Wiener coined the term “cybernetics” from the Greek word for “steersman.” (1)

1948 John von Neumann (quoted by E.T. Jaynes) in response to a comment at a lecture that it was impossible for a machine to think: “You insist that there is something a machine cannot do. If you will tell me precisely what it is that a machine cannot do, then I can always make a machine which will do just that!” Von Neumann was presumably alluding to the Church-Turing thesis, which states that any effective procedure can be simulated by a (generalized) computer. (1)

1949 Alan Turing quoted by The London Times on artificial intelligence: On June 11, The London Times quotes the mathematician Alan Turing. “I do not see why it (the machine) should not enter any one of the fields normally covered by the human intellect, and eventually compete on equal terms. I do not think you even draw the line about sonnets, though the comparison is perhaps a little bit unfair because a sonnet written by a machine will be better appreciated by another machine.” (1)

1949: Computer scientist Edmund Berkeley published “Giant Brains: Or Machines That Think,” in which he writes: “Recently there have been a good deal of news about strange giant machines that can handle information with vast speed and skill….” He went on to compare machines to a human brain made of “hardware and wire instead of flesh and nerves,” likening machine ability to that of the human mind and stating that “a machine, therefore, can think.” (1)

1949 Donald Hebb publishes The Organization of Behavior: A Neuropsychological Theory, in which he proposes a theory of learning based on conjectures about neural networks and the ability of synapses to strengthen or weaken over time. (1)

1950 Claude Shannon published a detailed analysis of chess playing as search in “Programming a Computer for Playing Chess.” (1)

AI in the 1950s

The 1950s proved to be a time when many advances in artificial intelligence came to fruition, with an upswing in research findings from computer scientists and others. The modern history of AI begins with the development of stored-program electronic computers. For a short summary, see “Genius and Tragedy at Dawn of Computer Age” by Alice Rawsthorn, The New York Times (March 25, 2012), a review of technology historian George Dyson’s book “Turing’s Cathedral: The Origins of the Digital Universe.”

1950 Grey Walter’s Elsie: A neurophysiologist, Walter built wheeled automatons in order to experiment with goal-seeking behavior. His best known robot, Elsie, used photoelectric cells to seek moderate light while avoiding both strong light and darkness, which made it peculiarly attracted to women’s stockings. (2,3)

1950 Isaac Asimov published his three laws of robotics (1950). (1)

1950 I, Robot: Isaac Asimov’s I, Robot is published. Perhaps in reaction to earlier dangerous fictional robots, Asimov’s creations must obey the “Three Laws of Robotics” (1941) to ensure they are no threat to humans or each other. The book consists of nine science fiction short stories. (1)

1949 Brain surgeon reflects on artificial intelligence: On June 9, at Manchester University’s Lister Oration, British brain surgeon Geoffrey Jefferson states, “Not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain – that is, not only write it but know that it had written it. No mechanism could feel (and not merely artificially signal, an easy contrivance) pleasure at its successes, grief when its valves fuse, be warmed by flattery, be made miserable by its mistakes, be charmed by sex, be angry or miserable when it cannot get what it wants.” (1)

1950: Claude Shannon, “the father of information theory,” published “Programming a Computer for Playing Chess,” which was the first article to discuss the development of a chess-playing computer program. (1)

1950: Alan Turing published “Computing Machinery and Intelligence,” which proposed the Imitation Game as a way of considering whether machines can think. This proposal later became known as the Turing Test, a measure of machine (artificial) intelligence that tests a machine’s ability to think as a human would. The Turing Test became an important component in the philosophy of artificial intelligence, which discusses intelligence, consciousness, and ability in machines. (1,2)

1951 The first working AI programs were written in 1951 to run on the Ferranti Mark 1 machine of the University of Manchester: a checkers-playing program written by Christopher Strachey and a chess playing program written by Dietrich Prinz. (3)

1951 Squee: the robot squirrel: Squee: The Robot Squirrel uses two light sensors and two contact switches to hunt for “nuts” (actually, tennis balls) and drag them to its nest. Squee was described as “75% reliable,” but it worked well only in a very dark room. Squee was conceived by computer pioneer Edmund Berkeley, who earlier wrote the hugely popular book Giant Brains or Machines That Think (1949). The original Squee prototype is in the permanent collection of the Computer History Museum. (1,2)

1951 Marvin Minsky and Dean Edmonds build SNARC (Stochastic Neural Analog Reinforcement Calculator), the first artificial neural network, using 3000 vacuum tubes to simulate a network of 40 neurons. (3)

1952 Arthur Samuel (IBM) wrote the first game-playing program, for checkers (draughts), to achieve sufficient skill to challenge a respectable amateur. His first checkers-playing program was written in 1952, and in 1955 he created a version that learned to play. (3)

August 31, 1955 The term “artificial intelligence” is coined in a proposal for a “2 month, 10 man study of artificial intelligence” submitted by John McCarthy (Dartmouth College), Marvin Minsky (Harvard University), Nathaniel Rochester (IBM), and Claude Shannon (Bell Telephone Laboratories). The workshop, which took place a year later, in July and August 1956, is generally considered the official birthdate of the new field. (1,2)

1952: Arthur Samuel, a computer scientist, developed a checkers-playing computer program – the first to independently learn how to play a game. (3)

1955: John McCarthy and a team of colleagues created a proposal for a workshop on “artificial intelligence.” When the workshop took place in 1956, McCarthy was credited with coining the term. (3)

December 1955 Herbert Simon and Allen Newell, with J.C. Shaw, develop the Logic Theorist, the first artificial intelligence program, which would eventually prove 38 of the first 52 theorems in Whitehead and Russell’s Principia Mathematica. Logic Theorist introduced several critical concepts to artificial intelligence, including heuristics, list processing and ‘reasoning as search.’ (1)

1950 The Turing test: Alan Turing created a standard test to answer the question “Can machines think?” He proposed that if a computer, on the basis of written replies to questions, could not be distinguished from a human respondent, then it must be “thinking.” (1)

1956 Robby the Robot: Robby the Robot appears in MGM’s 1956 science fiction movie Forbidden Planet. In the film, Robby was the creation of Dr. Morbius and was built to specifications found in an alien computer system. Robby’s duties included assisting the human crew while following Isaac Asimov’s Three Laws of Robotics (1941). The movie was a cult hit, in part because of Robby’s humorous personality, and Robby the Robot toys became huge sellers. (3)

1956

The Dartmouth College summer AI conference is organized by John McCarthy, Marvin Minsky, Nathaniel Rochester of IBM and Claude Shannon. McCarthy coins the term artificial intelligence for the conference. (1)

- The first demonstration of the Logic Theorist (LT) written by Allen Newell, J.C. Shaw and Herbert A. Simon (Carnegie Institute of Technology, now Carnegie Mellon University or CMU). This is often called the first AI program, though Samuel’s checkers program also has a strong claim. See Over the holidays 50 years ago, two scientists hatched artificial intelligence. (2)

1957 Frank Rosenblatt develops the Perceptron, an early artificial neural network enabling pattern recognition based on a two-layer computer learning network. The New York Times reported the Perceptron to be “the embryo of an electronic computer that [the Navy] expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.” The New Yorker called it a “remarkable machine… capable of what amounts to thought.” (2)
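The learning idea behind the Perceptron can be sketched in a few lines of Python. This is a minimal software sketch under stated assumptions, not Rosenblatt’s original hardware: it uses the textbook form of the perceptron learning rule with 0/1 labels, a bias term and a learning rate of 1, and the names `train_perceptron`, `predict` and `AND_DATA` are hypothetical. On each misclassified example, the weights are nudged toward that input (if it should have fired) or away from it (if it should not have).

```python
from typing import List, Sequence, Tuple

# A training sample: (input vector, target label 0 or 1).
Sample = Tuple[Sequence[float], int]


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Threshold unit: output 1 if the weighted sum plus bias is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples: Sequence[Sample], epochs: int = 100,
                     lr: float = 1.0) -> Tuple[List[float], float]:
    """Classic perceptron rule: adjust weights only when a sample is misclassified."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in samples:
            err = y - predict(w, b, x)  # -1, 0 or +1
            if err:
                mistakes += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if mistakes == 0:  # every sample classified correctly: stop early
            break
    return w, b


# Hypothetical training set: the truth table of logical AND.
AND_DATA: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND_DATA)
    print("weights:", w, "bias:", b)
    print([predict(w, b, x) for x, _ in AND_DATA])
```

The loop stops once a full pass makes no mistakes; for linearly separable data such as the AND table, the perceptron convergence theorem guarantees this happens after a finite number of updates.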

1957 The General Problem Solver (GPS) demonstrated by Newell, Shaw & Simon. (1)

1958 John McCarthy (Massachusetts Institute of Technology or MIT) develops the programming language LISP (short for “List Processing”), which becomes the most popular programming language used in artificial intelligence research. A key feature of LISP was that data and programs were simply lists in parentheses, allowing a program to treat another program, or itself, as data. This characteristic greatly eased the kind of programming that attempted to model human thought. LISP is still used in a large number of artificial intelligence applications. (1)

1958

Herbert Gelernter and Nathaniel Rochester (IBM) described a theorem prover in geometry that exploits a semantic model of the domain in the form of diagrams of “typical” cases. (1)

Teddington Conference on the Mechanization of Thought Processes was held in the UK and among the papers presented were John McCarthy’s Programs with Common Sense, Oliver Selfridge’s Pandemonium, and Marvin Minsky’s Some Methods of Heuristic Programming and Artificial Intelligence. (1)

1959 The General Problem Solver (GPS) was created by Newell, Shaw and Simon while at CMU. (1)

1959 John McCarthy and Marvin Minsky founded the MIT AI Lab. (2)

1959 Arthur Samuel coins the term “machine learning,” reporting on programming a computer “so that it will learn to play a better game of checkers than can be played by the person who wrote the program.” (1)

1959 Oliver Selfridge publishes “Pandemonium: A paradigm for learning” in the Proceedings of the Symposium on Mechanization of Thought Processes, in which he describes a model for a process by which computers could recognize patterns that have not been specified in advance. (1,2)

1959 John McCarthy publishes “Programs with Common Sense” in the Proceedings of the Symposium on Mechanization of Thought Processes, in which he describes the Advice Taker, a program for solving problems by manipulating sentences in formal languages with the ultimate objective of making programs “that learn from their experience as effectively as humans do.” (1)

1959 Automatically Programmed Tools (APT): MIT’s Servomechanisms Laboratory demonstrates computer-assisted manufacturing (CAM). The school’s Automatically Programmed Tools project created a language, APT, used to control milling machine operations. At the demonstration, an air force general claimed that the new technology would enable the United States to “build a war machine that nobody would want to tackle.” The machine produced a commemorative ashtray for each attendee. (3)

1952-62

- Arthur Samuel (IBM) wrote the first game-playing program, for checkers, to achieve sufficient skill to challenge a world champion. Samuel’s machine learning programs were responsible for the high performance of the checkers player. (3)

AI in the 1960s

Innovation in the field of artificial intelligence grew rapidly through the 1960s. New programming languages, robots and automatons, research studies, and films depicting artificially intelligent beings all proliferated, highlighting the importance of AI in the second half of the 20th century.

Late 1950s & early 1960s

- Margaret Masterman & colleagues at Cambridge design semantic nets for machine translation. See Themes in the work of Margaret Masterman by Yorick Wilks (1988). (2)

- Ray Solomonoff lays the foundations of a mathematical theory of AI, introducing universal Bayesian methods for inductive inference and prediction. (1)

- Man-Computer Symbiosis by J.C.R. Licklider (1960). (2)

1960 Quicksort Algorithm: While studying machine translation of languages in Moscow, C. A. R. Hoare develops Quicksort, an algorithm that would become one of the most used sorting methods in the world. Later, Hoare went to work for the British computer company Elliott Brothers, where he designed the first commercial Algol 60 compiler. Queen Elizabeth II knighted C.A.R. Hoare in 2000. (1)

1961 James Slagle (PhD dissertation, MIT) wrote (in Lisp) the first symbolic integration program, SAINT, which solved calculus problems at the college freshman level. (1)

1961 In Minds, Machines and Gödel, John Lucas denied the possibility of machine intelligence on logical or philosophical grounds. He referred to Kurt Gödel’s result of 1931: sufficiently powerful formal systems are either inconsistent or allow for formulating true theorems unprovable by any theorem-proving AI deriving all provable theorems from the axioms. Since humans are able to “see” the truth of such theorems, machines were deemed inferior. (1)

1961: Unimate, an industrial robot invented by George Devol in the 1950s, became the first to work on a General Motors assembly line in New Jersey. Its responsibilities included transporting die castings from the assembly line and welding the parts on to cars – a task deemed dangerous for humans. UNIMATE, the first mass-produced industrial robot, begins work at General Motors. Obeying step-by-step commands stored on a magnetic drum, the 4,000-pound robot arm sequenced and stacked hot pieces of die-cast metal. UNIMATE was the brainchild of Joe Engelberger and George Devol, and originally automated the manufacture of TV picture tubes. (3)

1961 James Slagle develops SAINT (Symbolic Automatic INTegrator), a heuristic program that solved symbolic integration problems in freshman calculus. (1)

1961 In Minds, Machines and Gödel, John Lucas[38] denied the possibility of machine intelligence on logical or philosophical grounds. He referred to Kurt Gödel‘s result of 1931: sufficiently powerful formal systems are either inconsistent or allow for formulating true theorems unprovable by any theorem proving AI deriving all provable theorems from the axioms. Since humans are able to “see” the truth of such theorems, machines were deemed inferior. (1)

1962

- First industrial robot company, Unimation, founded.

1963 The Rancho Arm: Researchers design the Rancho Arm robot at Rancho Los Amigos Hospital in Downey, California, as a tool for people with disabilities. The Rancho Arm’s six joints gave it the flexibility of a human arm. Acquired by Stanford University in 1963, it was among the first robotic arms to be controlled by a computer. (3)

1963

- Thomas Evans’ program, ANALOGY, written as part of his PhD work at MIT, demonstrated that computers can solve the same analogy problems as are given on IQ tests. (1)

- Edward Feigenbaum and Julian Feldman published Computers and Thought, the first collection of articles about artificial intelligence. (1)

- Leonard Uhr and Charles Vossler published “A Pattern Recognition Program That Generates, Evaluates, and Adjusts Its Own Operators”, which described one of the first machine learning programs that could adaptively acquire and modify features and thereby overcome the limitations of Rosenblatt’s simple perceptrons. (1)

- Ivan Sutherland’s MIT dissertation on Sketchpad introduced the idea of interactive graphics into computing. (1)

1964

Bertram Raphael’s MIT dissertation on the SIR program demonstrates the power of a logical representation of knowledge for question-answering systems. (1)

1964 Daniel Bobrow completes his MIT PhD dissertation titled “Natural Language Input for a Computer Problem Solving System” and develops STUDENT, a natural language understanding computer program. (1)

1965 Lotfi Zadeh at U.C. Berkeley publishes “Fuzzy Sets” (Information and Control 8: 338–353), his first paper introducing fuzzy logic. (1)

1965 J. Alan Robinson invented a mechanical proof procedure, the Resolution Method, which allowed programs to work efficiently with formal logic as a representation language. (See Carl Hewitt’s Middle History of Logic Programming.) (2)

1965 Joseph Weizenbaum (MIT) builds ELIZA, an interactive program that carries on a dialogue in English on any topic. It became a popular toy at AI centers on the ARPANET once a version that “simulated” the dialogue of a psychotherapist was programmed. Weizenbaum, who wanted to demonstrate how “superficial” communication between humans and machines was, was surprised by how many people attributed human-like feelings to the program. (3)

1965 Edward Feigenbaum, Bruce G. Buchanan, Joshua Lederberg, and Carl Djerassi (with Georgia Sutherland) begin work at Stanford University on DENDRAL, a ten-year effort to develop software that deduces the molecular structure of organic compounds from scientific instrument data, chiefly mass spectra. Widely regarded as the first expert system, DENDRAL captured the accumulated expertise of organic chemists as a battery of “if-then” rules, automating their decision-making and problem-solving behavior and in some cases identifying structures more accurately than experts. Its general aim was to study hypothesis formation and construct models of empirical induction in science, and it became the first successful knowledge-based program for scientific reasoning. (1,2,3)

1965 Herbert Simon predicts that “machines will be capable, within twenty years, of doing any work a man can do.” (1)

1965 Hubert Dreyfus publishes “Alchemy and AI,” arguing that the mind is not like a computer and that there were limits beyond which AI would not progress. (1)

1965 I.J. Good writes in “Speculations Concerning the First Ultraintelligent Machine” that “the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.” (1)

1966

- Ross Quillian (PhD dissertation, Carnegie Inst. of Technology, now CMU) demonstrated semantic nets. (1)

- Machine Intelligence[39] workshop at Edinburgh – the first of an influential annual series organized by Donald Michie and others. (2)

- The negative ALPAC report on machine translation kills much work in natural language processing (NLP) for many years. (3)

1966 Joseph Weizenbaum’s ELIZA: Joseph Weizenbaum finishes ELIZA, a natural language processing environment. Its most famous mode, DOCTOR, responded to user questions much like a psychotherapist. DOCTOR was able to trick some users into believing they were interacting with another human, at least until the program reached its limitations and became nonsensical. It used predetermined phrases or questions and substituted key words from the user’s input to mimic a human who was actually listening to the user’s queries or statements. (3)
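The keyword-substitution technique described above can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not Weizenbaum’s code. The rule list, the pronoun table, and the function name `respond` are hypothetical. The original ELIZA used a much richer script of ranked keywords and decomposition rules.

```python
import re

# Hypothetical pronoun table: swaps first- and second-person words so the
# user's own phrase can be echoed back ("my exams" -> "your exams").
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# Hypothetical DOCTOR-style rules: a keyword pattern plus a response template.
# "{0}" is filled with the (reflected) text captured after the keyword.
RULES = [
    (re.compile(r"\bi need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Predetermined fallback phrase used when no keyword matches.
DEFAULT = "Please go on."


def reflect(fragment: str) -> str:
    """Swap pronouns word by word in the captured fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(w, w) for w in words)


def respond(statement: str) -> str:
    """Return the first matching rule's response, else the fallback."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT


if __name__ == "__main__":
    print(respond("I am worried about my exams."))
    print(respond("The weather is nice."))
```

Because the program only matches surface keywords, it produces a plausible reply without any model of meaning. That is the "superficiality" Weizenbaum set out to demonstrate.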

1966 The ORM: Developed at Stanford University, the Orm robot (Norwegian for “snake”) was an unusual air-powered robotic arm. It moved by inflating one or more of its 28 rubber bladders that were sandwiched between seven metal disks. The design was abandoned because movements could not be repeated accurately.

1966 Shakey the Robot, developed at SRI by Charles Rosen with the help of 11 others, is the first general-purpose mobile robot able to reason about its own actions. In a 1970 Life magazine article about this “first electronic person,” Marvin Minsky is quoted saying with “certitude”: “In from three to eight years we will have a machine with the general intelligence of an average human being.” (3)

Late 60s

- Doug Engelbart invented the mouse at SRI.

1968

Joel Moses (PhD work at MIT) demonstrated the power of symbolic reasoning for integration problems in the Macsyma program. First successful knowledge-based program in mathematics. (2)

Richard Greenblatt at MIT built a knowledge-based chess-playing program, MacHack, that was good enough to achieve a class-C rating in tournament play. (3)

Wallace and Boulton’s program, Snob (Comp.J. 11(2) 1968), for unsupervised classification (clustering) uses the Bayesian Minimum Message Length criterion, a mathematical realisation of Occam’s razor. (3)

1968: The sci-fi film 2001: A Space Odyssey, directed by Stanley Kubrick, is released. It features HAL (Heuristically programmed ALgorithmic computer), a sentient computer. HAL controls the spacecraft’s systems and converses with the ship’s crew as if HAL were human, until a malfunction turns HAL against them. (2)

1968 SHRDLU natural language: Terry Winograd begins work on his PhD thesis at MIT, which focused on SHRDLU, an early natural language understanding program used in artificial intelligence research. While precursor programs like ELIZA were incapable of truly understanding English commands and responding appropriately, SHRDLU was able to combine syntax, meaning and deductive reasoning to accomplish this. SHRDLU’s universe was also very simple: commands consisted of picking up and moving blocks, cones and pyramids of various shapes and colors. (1)

1969

Stanford Research Institute (SRI): Shakey the Robot is demonstrated, combining animal locomotion, perception and problem solving. (3)

Roger Schank (Stanford) defined conceptual dependency model for natural language understanding. Later developed (in PhD dissertations at Yale University) for use in story understanding by Robert Wilensky and Wendy Lehnert, and for use in understanding memory by Janet Kolodner. (1)

Yorick Wilks (Stanford) developed the semantic coherence view of language called Preference Semantics. It was embodied in the first semantics-driven machine translation program and has been the basis of many PhD dissertations since, such as those of Bran Boguraev and David Carter at Cambridge. (1)

First International Joint Conference on Artificial Intelligence (IJCAI) held at Stanford. (2)

Marvin Minsky and Seymour Papert publish Perceptrons: An Introduction to Computational Geometry, demonstrating previously unrecognized limits of this feed-forward, two-layered structure. The book is considered by some to mark the beginning of the AI winter of the 1970s, a failure of confidence and funding for AI, although significant progress in the field continued (see below). In an expanded edition published in 1988, the authors responded to claims that their 1969 conclusions significantly reduced funding for neural network research: “Our version is that progress had already come to a virtual halt because of the lack of adequate basic theories… by the mid-1960s there had been a great many experiments with perceptrons, but no one had been able to explain why they were able to recognize certain kinds of patterns and not others.” (1)

McCarthy and Hayes started the discussion about the frame problem with their essay, “Some Philosophical Problems from the Standpoint of Artificial Intelligence”. (1)

1969 Arthur Bryson and Yu-Chi Ho describe backpropagation as a multi-stage dynamic system optimization method. A learning algorithm for multi-layer artificial neural networks, it contributed significantly to the success of deep learning in the 2000s and 2010s, once computing power had advanced enough to accommodate the training of large networks. (1,2)

1969 Victor Scheinman’s Stanford Arm: Victor Scheinman’s Stanford Arm robot makes a breakthrough as the first successful electrically powered, computer-controlled robot arm. By 1974, the Stanford Arm could assemble a Ford Model T water pump, guiding itself with optical and contact sensors. The Stanford Arm led directly to commercial production: Scheinman went on to design the PUMA series of industrial robots for Unimation, robots used for automobile assembly and other industrial tasks. (3)

1969 The Tentacle arm: Marvin Minsky develops the Tentacle Arm robot, which moves like an octopus. It has twelve joints designed to reach around obstacles. A DEC PDP-6 computer controls the arm, powered by hydraulic fluids. Mounted on a wall, it could lift the weight of a person. (3)

AI in the 1970s

Like the 1960s, the 1970s brought accelerated advancements, particularly in robots and automatons. However, artificial intelligence in the 1970s faced challenges, such as reduced government support for AI research.

Early 70’s

Jane Robinson and Don Walker established an influential Natural Language Processing group at SRI. (3)

Seppo Linnainmaa publishes the reverse mode of automatic differentiation. This method later became known as backpropagation and is heavily used to train artificial neural networks. (1)

Jaime Carbonell (Sr.) developed SCHOLAR, an interactive program for computer assisted instruction based on semantic nets as the representation of knowledge. (3)

Bill Woods described Augmented Transition Networks (ATNs) as a representation for natural language understanding. (1)

Patrick Winston’s PhD program, ARCH, at MIT learned concepts from examples in the world of children’s blocks. (3)

1970 Shakey the robot: SRI International’s Shakey robot becomes the first mobile robot controlled by artificial intelligence. Equipped with sensing devices and driven by a problem-solving program called STRIPS, the robot found its way around the halls of SRI by applying information about its environment to a route. Shakey used a TV camera, laser range finder, and bump sensors to collect data, which it then transmitted to a DEC PDP-10 and PDP-15. The computer sent commands to Shakey over a radio link. Shakey could move at a speed of 2 meters per hour. (3)

1970 The first anthropomorphic robot, the WABOT-1, is built at Waseda University in Japan. It consisted of a limb-control system, a vision system and a conversation system. (3)

1971 Terry Winograd’s PhD thesis (MIT) demonstrated the ability of computers to understand English sentences in a restricted world of children’s blocks, in a coupling of his language understanding program, SHRDLU, with a robot arm that carried out instructions typed in English. (3)

1971 Work on the Boyer-Moore theorem prover started in Edinburgh.[40] (1)

1972 Prolog programming language developed by Alain Colmerauer. (1)

1972 LUNAR natural language information retrieval system: LUNAR is completed by William Woods, Ronald Kaplan and Bonnie Nash-Webber at Bolt, Beranek and Newman (BBN). LUNAR helped geologists access, compare and evaluate chemical-analysis data on moon rock and soil composition from the Apollo 11 mission. Woods was the manager of the BBN AI Department throughout the 1970s and into the early 1980s. (3)

1972 MYCIN, an early expert system for identifying bacteria causing severe infections and recommending antibiotics, is developed at Stanford University. (1)

1973 Applied mathematician James Lighthill reports to the British Science Research Council on the state of artificial intelligence research, concluding that “in no part of the field have discoveries made so far produced the major impact that was then promised.” The Lighthill report’s largely negative verdict on AI research in Great Britain formed the basis for the British government’s decision to discontinue support for AI research in all but two universities, drastically reducing government funding for the field. (1,3)

1973 The Assembly Robotics Group at University of Edinburgh builds Freddy Robot, capable of using visual perception to locate and assemble models. (See Edinburgh Freddy Assembly Robot: a versatile computer-controlled assembly system.) (3)

1973: Inventing the Internet: Vint Cerf and Bob Kahn first sketch out the TCP/IP internetworking protocol. (1,3)

1974 The silver arm: David Silver at MIT designs the Silver Arm, a robotic arm to do small-parts assembly using feedback from delicate touch and pressure sensors. The arm’s fine movements approximate those of human fingers. (3)

1974

Ted Shortliffe’s PhD dissertation on the MYCIN program (Stanford) demonstrated a very practical rule based approach to medical diagnoses, even in the presence of uncertainty. While it borrowed from DENDRAL, its own contributions strongly influenced the future of expert system development, especially commercial systems. (1)

Earl Sacerdoti developed one of the first planning programs, ABSTRIPS, and developed techniques of hierarchical planning. (1)

1975

Earl Sacerdoti developed techniques of partial-order planning in his NOAH system, replacing the previous paradigm of search among state space descriptions. NOAH was applied at SRI International to interactively diagnose and repair electromechanical systems. (3)

Austin Tate developed the Nonlin hierarchical planning system, able to search a space of partial plans characterised as alternative approaches to the underlying goal structure of the plan. (2)

Marvin Minsky published his widely read and influential article on Frames as a representation of knowledge, in which many ideas about schemas and semantic links are brought together. (1)

The Meta-Dendral learning program produced new results in chemistry (some rules of mass spectrometry), the first scientific discoveries by a computer to be published in a refereed journal. (1)

Mid 70’s

- Barbara Grosz (SRI) established limits to traditional AI approaches to discourse modeling. Subsequent work by Grosz, Bonnie Webber and Candace Sidner developed the notion of “centering”, used in establishing focus of discourse and anaphoric references in Natural language processing. (1,3)

- David Marr and MIT colleagues describe the “primal sketch” and its role in visual perception. (1)

- Alan Kay and Adele Goldberg (Xerox PARC) developed the Smalltalk language, establishing the power of object-oriented programming and of icon-oriented interfaces.

1976

- Douglas Lenat’s AM program (Stanford PhD dissertation) demonstrated the discovery model (loosely guided search for interesting conjectures). (1)

- Randall Davis demonstrated the power of meta-level reasoning in his PhD dissertation at Stanford. (1)

1976 Shigeo Hirose’s Soft Gripper: Shigeo Hirose’s Soft Gripper robot can conform to the shape of a grasped object, such as a wine glass filled with flowers. The design Hirose created at the Tokyo Institute of Technology grew from his studies of flexible structures in nature, such as elephant trunks and snake spinal cords. (3)

1976 Computer scientist Raj Reddy publishes “Speech Recognition by Machine: A Review” in the Proceedings of the IEEE, summarizing the early work on Natural Language Processing (NLP). (1)

1977: Director George Lucas’ film Star Wars is released. The film features C-3PO, a humanoid robot designed as a protocol droid and “fluent in more than seven million forms of communication.” As a companion to C-3PO, the film also features R2-D2, a small astromech droid who is incapable of human speech (the inverse of C-3PO) and instead communicates with electronic beeps. Its functions include small repairs and co-piloting starfighters. (2)

Late 70’s

Stanford’s SUMEX-AIM resource, headed by Ed Feigenbaum and Joshua Lederberg, demonstrates the power of the ARPAnet for scientific collaboration. (3)

1978

Tom Mitchell, at Stanford, invented the concept of Version spaces for describing the search space of a concept formation program. (2)

Herbert A. Simon wins the Nobel Prize in Economics for his theory of bounded rationality, including the concept of “satisficing,” one of the cornerstones of AI. (1)

The MOLGEN program, written at Stanford by Mark Stefik and Peter Friedland, demonstrated that an object-oriented programming representation of knowledge can be used to plan gene cloning experiments. (1)

1978 The XCON (eXpert CONfigurer) program, a rule-based expert system assisting in the ordering of DEC’s VAX computers by automatically selecting the components based on the customer’s requirements, is developed at Carnegie Mellon University.

1978 Speak & Spell: Texas Instruments Inc. introduces Speak & Spell, a talking learning aid for children aged 7 and up. Its debut marked the first electronic duplication of the human vocal tract on a single integrated circuit. Speak & Spell used linear predictive coding to formulate a mathematical model of the human vocal tract and predict a speech sample based on previous input. It transformed digital information processed through a filter into synthetic speech and could store more than 100 seconds of linguistic sounds. (3)

1979

Mycin program, initially written as Ted Shortliffe’s Ph.D. dissertation at Stanford, was demonstrated to perform at the level of experts. Bill VanMelle’s PhD dissertation at Stanford demonstrated the generality of MYCIN’s representation of knowledge and style of reasoning in his EMYCIN program, the model for many commercial expert system “shells”. (1)

Jack Myers and Harry Pople at University of Pittsburgh developed INTERNIST, a knowledge based medical diagnosis program based on Dr. Myers’ clinical knowledge. (3)

Cordell Green, David Barstow, Elaine Kant and others at Stanford demonstrated the CHI system for automatic programming. (3)

BKG, a backgammon program written by Hans Berliner at CMU, defeats the reigning world champion (in part via luck). (1)

Drew McDermott and Jon Doyle at MIT, and John McCarthy at Stanford begin publishing work on non-monotonic logics and formal aspects of truth maintenance. (1)

1979: The Stanford Cart, a remote-controlled, TV-equipped mobile robot, was created in 1961 by James L. Adams, then a mechanical engineering graduate student, and remained a long-term research project at Stanford University between 1960 and 1980. Hans Moravec, then a PhD student, rebuilt the cart in 1977 and equipped it with stereo vision: a television camera mounted on a rail on top of the cart took pictures from several different angles and relayed them to a computer. In 1979 Moravec added a “slider,” a mechanical swivel that moved the camera from side to side. That year the cart, now computer-controlled, crossed a chair-filled room without human intervention in about five hours, navigating around the chairs as obstacles, and circumnavigated the Stanford AI Lab, becoming one of the earliest examples of an autonomous vehicle. (3)

AI in the 1980s

The rapid growth of artificial intelligence continued through the 1980s. Despite the advancements and excitement surrounding AI, there was growing caution about a looming “AI Winter,” a period of reduced funding and interest in artificial intelligence.

Lisp machines developed and marketed. First expert system shells and commercial applications. (1,3)

1980 First National Conference of the American Association for Artificial Intelligence (AAAI) held at Stanford. (3)

1980: WABOT-2 was built at Waseda University. This version of the humanoid could communicate with people, read musical scores, and play tunes of average difficulty on an electronic organ. (3)

1980

Lee Erman, Rick Hayes-Roth, Victor Lesser and Raj Reddy published the first description of the blackboard model, as the framework for the HEARSAY-II speech understanding system. (1)

1981 Danny Hillis designs the Connection Machine, which uses parallel computing to bring new power to AI and to computation in general. (He later founded Thinking Machines Corporation.) (3)

1981 The Japanese Ministry of International Trade and Industry budgets $850 million for the Fifth Generation Computer project. The project aimed to develop computers that could carry on conversations, translate languages, interpret pictures, and reason like human beings. (3)

1981 The direct drive arm: The first direct drive (DD) arm by Takeo Kanade serves as the prototype for DD arms used in industry today. The electric motors housed inside the joints eliminated the need for the chains or tendons used in earlier robots. DD arms were fast and accurate because they minimized friction and backlash. (3)

The Boston Global Forum * The Michael Dukakis Institute for Leadership and Innovation

AI World Society Innovation Network, AIWS.net, [email protected], 67 Mount Vernon St, Unit F, Boston, MA 02108

1982 The FRED: Nolan Bushnell founded Androbot with former Atari engineers to make playful robots. The “Friendly Robotic Educational Device” (FRED), designed for 6- to 15-year-olds, never made it to market. (3)

1982 The Fifth Generation Computer Systems project (FGCS), an initiative by Japan’s Ministry of International Trade and Industry, begins. Its goal was to create a “fifth generation computer” that would perform large-scale computation using massive parallelism. (3)

1983

John Laird and Paul Rosenbloom, working with Allen Newell, complete CMU dissertations on Soar. (1)

James F. Allen invents the Interval Calculus, the first widely used formalization of temporal events. (2)

1984: The film Electric Dreams, directed by Steve Barron, is released. The plot revolves around a love triangle between a man, woman, and a sentient personal computer called “Edgar.” (2)

1984: At the annual conference of the American Association for Artificial Intelligence (AAAI, now the Association for the Advancement of Artificial Intelligence), AI theorist Roger Schank and cognitive scientist Marvin Minsky warn of an “AI Winter,” predicting an imminent bursting of the AI bubble, similar to the reduction in AI investment and research funding in the mid-1970s. The bubble did burst three years later. (3)

1984 The IBM 7535: Based on a Japanese robot, IBM’s 7535 was controlled by an IBM PC and programmed in IBM’s AML (“A Manufacturing Language”). It could manipulate objects weighing up to 13 pounds. (3)

1984 Hero Jr. robot kit: Heathkit introduces the Hero Jr. home robot kit, one of several robots it sells at the time. Hero Jr. could roam hallways guided by sonar, play games, sing songs and even act as an alarm clock. The brochure claimed it “seeks to remain near human companions” by listening for voices. (3)

Mid 80’s

Neural networks become widely used with the backpropagation algorithm, also known as the reverse mode of automatic differentiation, which Seppo Linnainmaa published in 1970 and Paul Werbos first described for neural networks in 1974.
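The equivalence between backpropagation and reverse-mode automatic differentiation can be made concrete with a small Python sketch. It is a hypothetical, scalar-only illustration: the `Var` class and its `backward` method are our own names, not a library API, and only addition, multiplication and `tanh` are supported. Each operation records its local derivative with respect to its inputs. A single backward pass then applies the chain rule from the output to every input.

```python
import math


class Var:
    """A scalar node in a computation graph that records how to push
    gradients back to its inputs (reverse-mode automatic differentiation)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each parent is stored with the local derivative d(self)/d(parent).
        self.parents = parents

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    def tanh(self):
        t = math.tanh(self.value)
        return Var(t, ((self, 1.0 - t * t),))

    def backward(self):
        """Propagate d(output)/d(node) from this output back to every input."""
        # Post-order DFS yields a topological order (inputs before outputs),
        # so walking it in reverse finishes each node's gradient before use.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                # Chain rule: upstream gradient times local derivative,
                # accumulated because a node may feed several others.
                parent.grad += node.grad * local


if __name__ == "__main__":
    # A single hypothetical "neuron": y = tanh(w * x + b).
    x, w, b = Var(2.0), Var(0.5), Var(-1.0)
    y = (w * x + b).tanh()
    y.backward()
    print(w.grad, b.grad)
```

One backward sweep yields the gradient with respect to every weight at roughly the cost of one forward evaluation. That efficiency is what made training multi-layer networks practical.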

1985 The autonomous drawing program, AARON, created by Harold Cohen, is demonstrated at the AAAI National Conference (based on more than a decade of work, and with subsequent work showing major developments).

1985 Denning Sentry Robot: Boston-based Denning designed the Sentry robot as a security guard patrolling for up to 14 hours at 3 mph. It radioed an alert about anything unusual in a 150-foot radius. The product, and the company, did not succeed.

1986 First driverless car, a Mercedes-Benz van equipped with cameras and sensors, built at Bundeswehr University in Munich under the direction of Ernst Dickmanns, drives up to 55 mph on empty streets.

1986 Barbara Grosz and Candace Sidner create the first computational model of discourse, establishing the field of research.

1986 LMI Lambda: The LMI Lambda LISP workstation is introduced. LISP, the preferred language for AI, ran slowly on expensive conventional computers. This specialized LISP computer, both faster and cheaper, was based on the CADR machine designed at MIT by Richard Greenblatt and Thomas Knight.

1986 Omnibot 2000: The Omnibot 2000 remote-controlled programmable robot toy could move, talk and carry objects. The cassette player in its chest recorded actions to be taken and speech to be played.

October 1986 David Rumelhart, Geoffrey Hinton, and Ronald Williams publish “Learning representations by back-propagating errors,” in which they describe “a new learning procedure, back-propagation, for networks of neurone-like units.”

1987 Mitsubishi Movemaster RM-501 Gripper: The Mitsubishi Movemaster RM-501 Gripper is introduced. This robot gripper and arm was a small, commercially available industrial robot used for tasks such as assembling products or handling chemicals. The arm, including the gripper, had six degrees of freedom and was driven by electric motors connected to the joints by belts. It could move fifteen inches per second, lift 2.7 pounds, and was accurate to within 0.02 inch.

1987 Marvin Minsky published The Society of Mind, a theoretical description of the mind as a collection of cooperating agents. He had been lecturing on the idea for years before the book came out (cf. Doyle 1983).

1987 Around the same time, Rodney Brooks introduced the subsumption architecture and behavior based robotics as a more minimalist modular model of natural intelligence; Nouvelle AI.

1987 Commercial launch of generation 2.0 of Alacrity by Alacritous Inc./Allstar Advice Inc. Toronto, the first commercial strategic and managerial advisory system. The system was based upon a forward-chaining, self-developed expert system with 3,000 rules about the evolution of markets and competitive strategies. It was co-authored by Alistair Davidson and Mary Chung, founders of the firm, with the underlying engine developed by Paul Tarvydas. The Alacrity system also included a small financial expert system that interpreted financial statements and models.

1987 The video Knowledge Navigator, accompanying Apple CEO John Sculley’s keynote speech at Educom, envisions a future in which “knowledge applications would be accessed by smart agents working over networks connected to massive amounts of digitized information.”

1988 Judea Pearl publishes Probabilistic Reasoning in Intelligent Systems. His 2011 Turing Award citation reads: “Judea Pearl created the representational and computational foundation for the processing of information under uncertainty. He is credited with the invention of Bayesian networks, a mathematical formalism for defining complex probability models, as well as the principal algorithms used for inference in these models. This work not only revolutionized the field of artificial intelligence but also became an important tool for many other branches of engineering and the natural sciences.”

1988 Rollo Carpenter develops the chat-bot Jabberwacky to “simulate natural human chat in an interesting, entertaining and humorous manner.” It is an early attempt at creating artificial intelligence through human interaction.

1988 Members of the IBM T.J. Watson Research Center publish “A statistical approach to language translation,” heralding the shift from rule-based to probabilistic methods of machine translation. The paper reflected a broader shift to “machine learning” based on statistical analysis of known examples rather than on comprehension and “understanding” of the task at hand (IBM’s project Candide, which successfully translated between English and French, was based on 2.2 million pairs of sentences, mostly from the bilingual proceedings of the Canadian parliament).

1988 Marvin Minsky and Seymour Papert publish an expanded edition of their 1969 book Perceptrons. In “Prologue: A View from 1988” they wrote: “One reason why progress has been so slow in this field is that researchers unfamiliar with its history have continued to make many of the same mistakes that others have made before them.”

1988 Computer scientist and philosopher Judea Pearl published “Probabilistic Reasoning in Intelligent Systems.” Pearl is also credited with inventing Bayesian networks, a “probabilistic graphical model” that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG).

1988 Rollo Carpenter, programmer and inventor of two chatbots, Jabberwacky and Cleverbot (released in the 1990s), developed Jabberwacky to “simulate natural human chat in an interesting, entertaining and humorous manner.” It is an example of AI that converses with people through a chatbot.

1989 Yann LeCun and other researchers at AT&T Bell Labs successfully apply a backpropagation algorithm to a multi-layer neural network, recognizing handwritten ZIP codes. Given the hardware limitations of the time, training the network took about 3 days (still a significant improvement over earlier efforts).

Dean Pomerleau at CMU creates ALVINN (An Autonomous Land Vehicle in a Neural Network), which grew into the system that drove a car coast-to-coast under computer control for all but about 50 of the 2,850 miles.

The development of metal–oxide–semiconductor (MOS) very-large-scale integration (VLSI), in the form of complementary MOS (CMOS) technology, enabled the development of practical artificial neural network (ANN) technology in the 1980s. A landmark publication in the field was the 1989 book Analog VLSI Implementation of Neural Systems by Carver A. Mead and Mohammed Ismail.

1989 Computer defeats master chess player: David Levy is the first master chess player to be defeated by a computer. The program Deep Thought defeats Levy, who had beaten every previous computer opponent since 1968.

AI in the 1990s

The end of the millennium was on the horizon, and artificial intelligence continued to grow through the decade.


Major advances in all areas of AI, with significant demonstrations in machine learning, intelligent tutoring, case-based reasoning, multi-agent planning, scheduling, uncertain reasoning, data mining, natural language understanding and translation, vision, virtual reality, games, and other topics.

Early 1990s TD-Gammon, a backgammon program written by Gerry Tesauro, demonstrates that reinforcement learning is powerful enough to create a championship-level game-playing program by competing favorably with world-class players.

Rod Brooks’ COG Project at MIT, with numerous collaborators, makes significant progress in building a humanoid robot.

The EQP theorem prover at Argonne National Labs proves the Robbins Conjecture in mathematics (October-November, 1996).

After losing their first match in 1996, the Deep Blue chess program beats the reigning world chess champion, Garry Kasparov, in a widely followed rematch (May 11th, 1997).

NASA’s Pathfinder mission made a successful landing, and the first autonomous robotic system, Sojourner, was deployed on the surface of Mars (July 4, 1997).

First official RoboCup soccer match (1997), featuring table-top matches with 40 teams of interacting robots and over 5,000 spectators.

Web crawlers and other AI-based information extraction programs become essential to widespread use of the World Wide Web.

Demonstration of an Intelligent Room and Emotional Agents at MIT’s AI Lab. Initiation of work on the Oxygen Architecture, which connects mobile and stationary computers in an adaptive network.